# Dashboard

Source: https://docs.prompteus.com/features/dashboard

Monitor your Neurons' performance, usage, and cost savings through the Prompteus dashboard.

The Prompteus dashboard provides real-time insights into your Neurons' performance, usage metrics, and cost savings. This guide explains how to interpret the dashboard metrics and use them to optimize your Neurons.

## Dashboard Overview

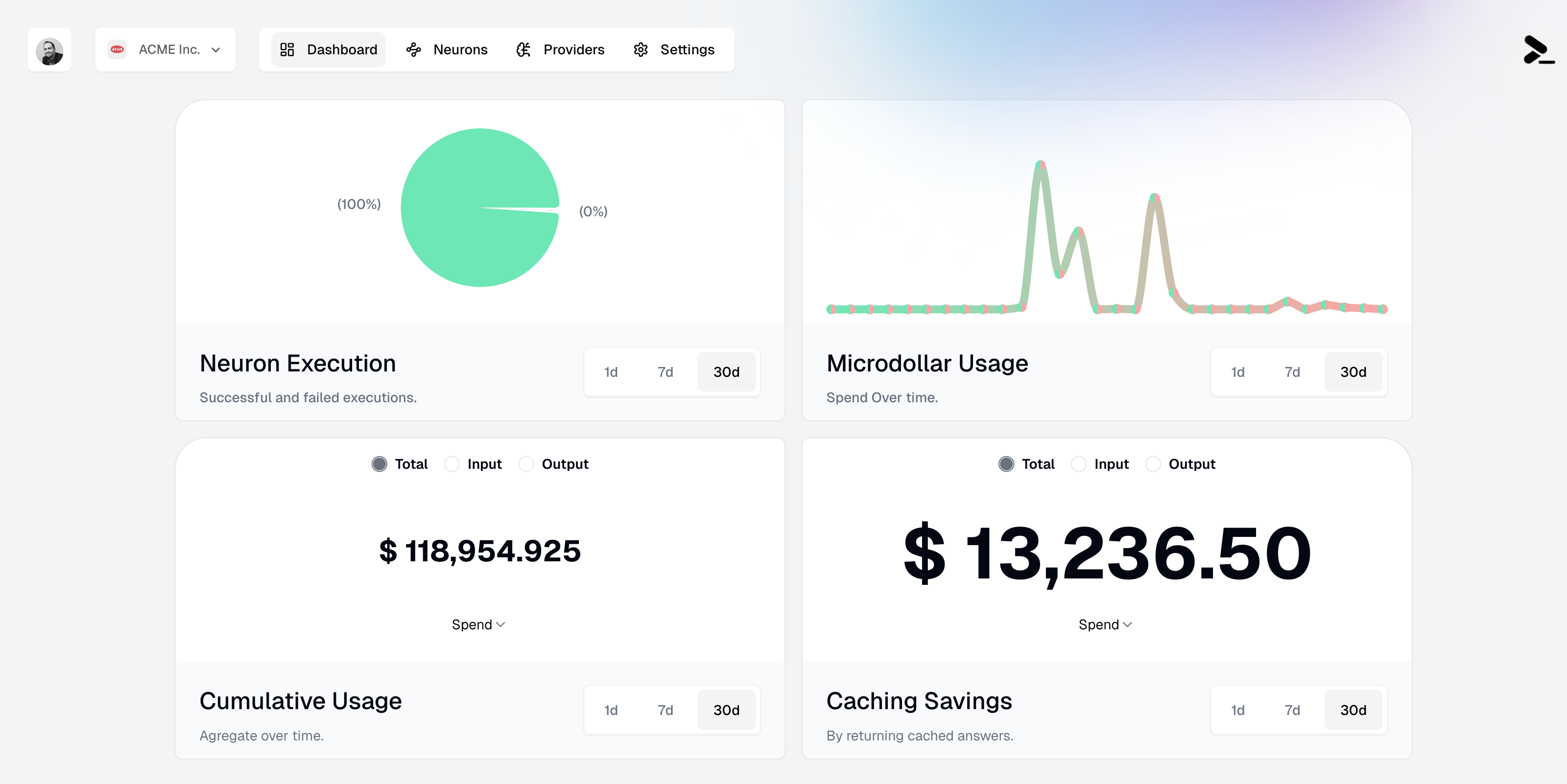

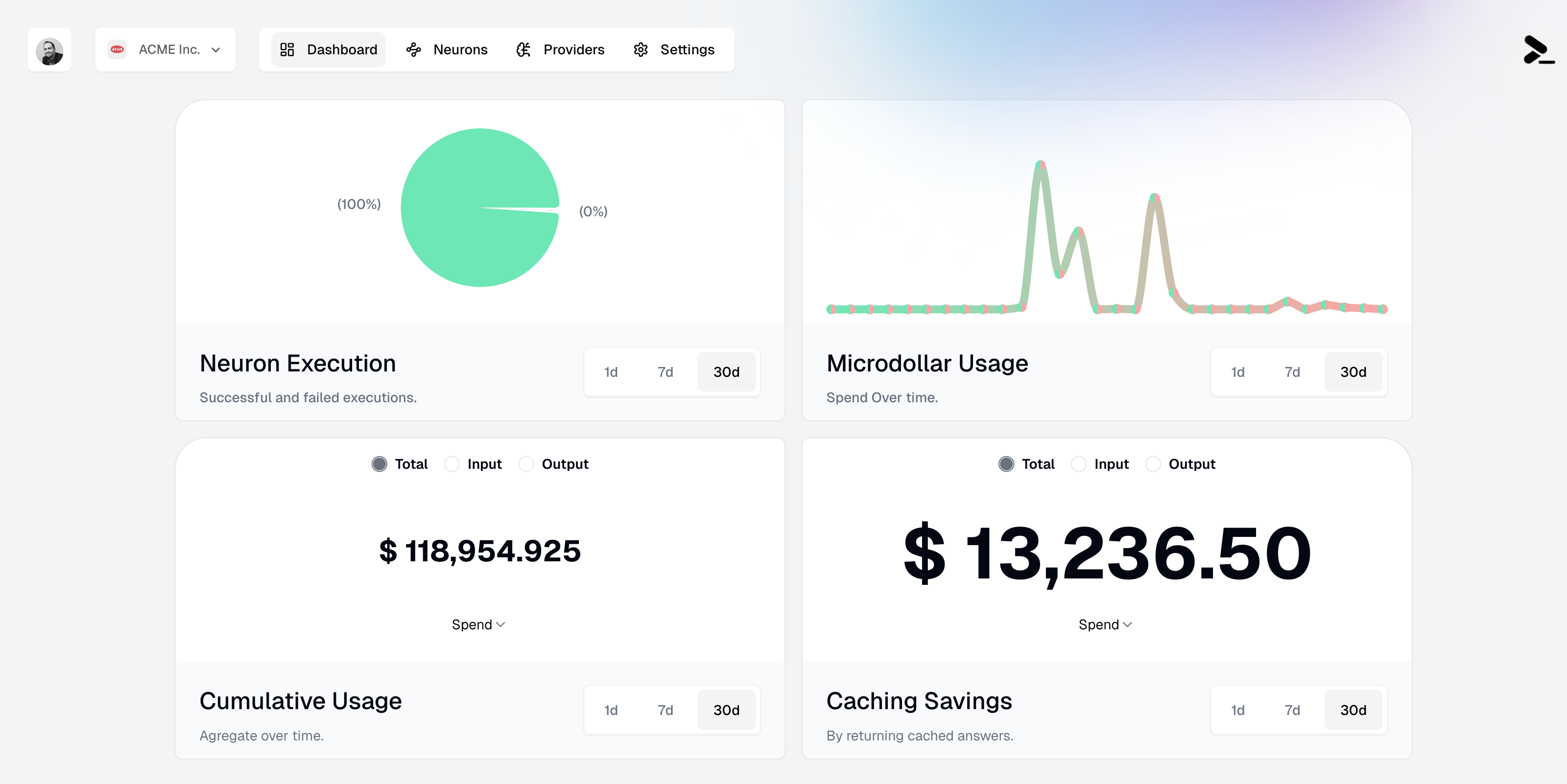

The dashboard consists of four main panels that give you a comprehensive view of your Neurons' performance and costs:

### Neuron Execution

The Neuron Execution panel shows the success rate of your Neuron executions through a pie chart visualization. It displays:

* Successful executions (green)

* Failed executions (if any, in red)

* Time range filters: 1d, 7d, and 30d

This metric helps you monitor the reliability of your Neurons and quickly identify if there are any issues that need attention.

### Microdollar Usage

The Microdollar Usage panel shows your spending over time. A microdollar (μ\$) is one millionth of a dollar, allowing for precise tracking of API costs at a very granular level. The graph shows:

* Usage patterns over time

* Spending spikes and trends

* Time range filters: 1d, 7d, and 30d

Microdollars (μ\$) are used to track costs with high precision. For example:

* μ\$1,000,000 = \$1.00

* μ\$100,000 = \$0.10

* μ\$1,000 = \$0.001

### Cumulative Usage

The Cumulative Usage panel tracks your total resource consumption, with options to view:

* Total tokens processed

* Input tokens

* Output tokens

* Total spend

* Time range filters: 1d, 7d, and 30d

For better readability, the dashboard automatically switches between dollars (\$) and microdollars (μ\$) based on the amount:

* Large amounts (≥ \$1.00) are shown in dollars (e.g., \$118,954.925)

* Small amounts (\< \$1.00) are shown in microdollars (e.g., μ\$100,000)

This helps you understand your overall usage patterns and plan capacity accordingly.

### Caching Savings

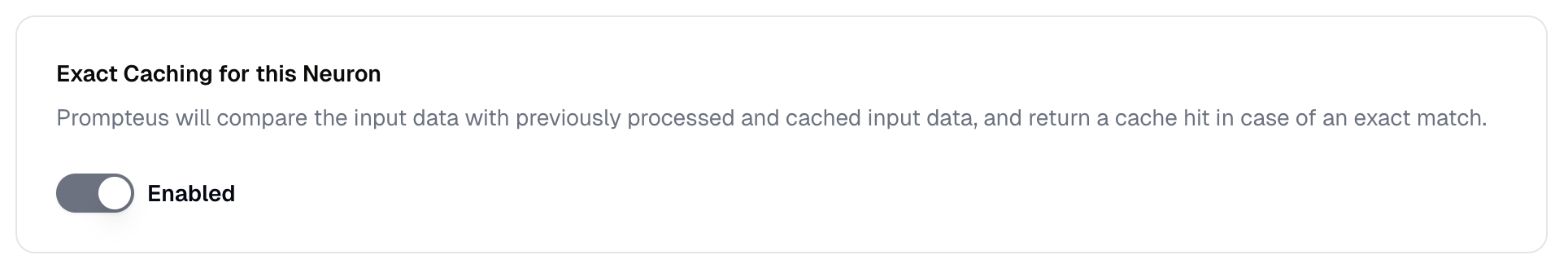

The Caching Savings panel shows how much you've saved through [caching](/neurons/settings/caching). It displays:

* Total amount saved through cache hits

* Savings over time graph

* Time range filters: 1d, 7d, and 30d

Like the Cumulative Usage panel, this panel also automatically switches between dollars and microdollars for better readability:

* Significant savings are displayed in dollars (e.g., \$13,236.50)

* Smaller savings are displayed in microdollars (e.g., μ\$13,236)

The automatic unit switching between dollars (\$) and microdollars (μ\$) helps you quickly understand the scale of usage and savings:

* Microdollars (μ\$) provide precision for small amounts

* Dollars (\$) offer familiarity for larger amounts

* The switch happens automatically at the \$0.0001 threshold

## Using Dashboard Insights

Here are some ways to use dashboard insights effectively:

1. **Monitor Performance**

* Track success rates to ensure your Neurons are functioning properly

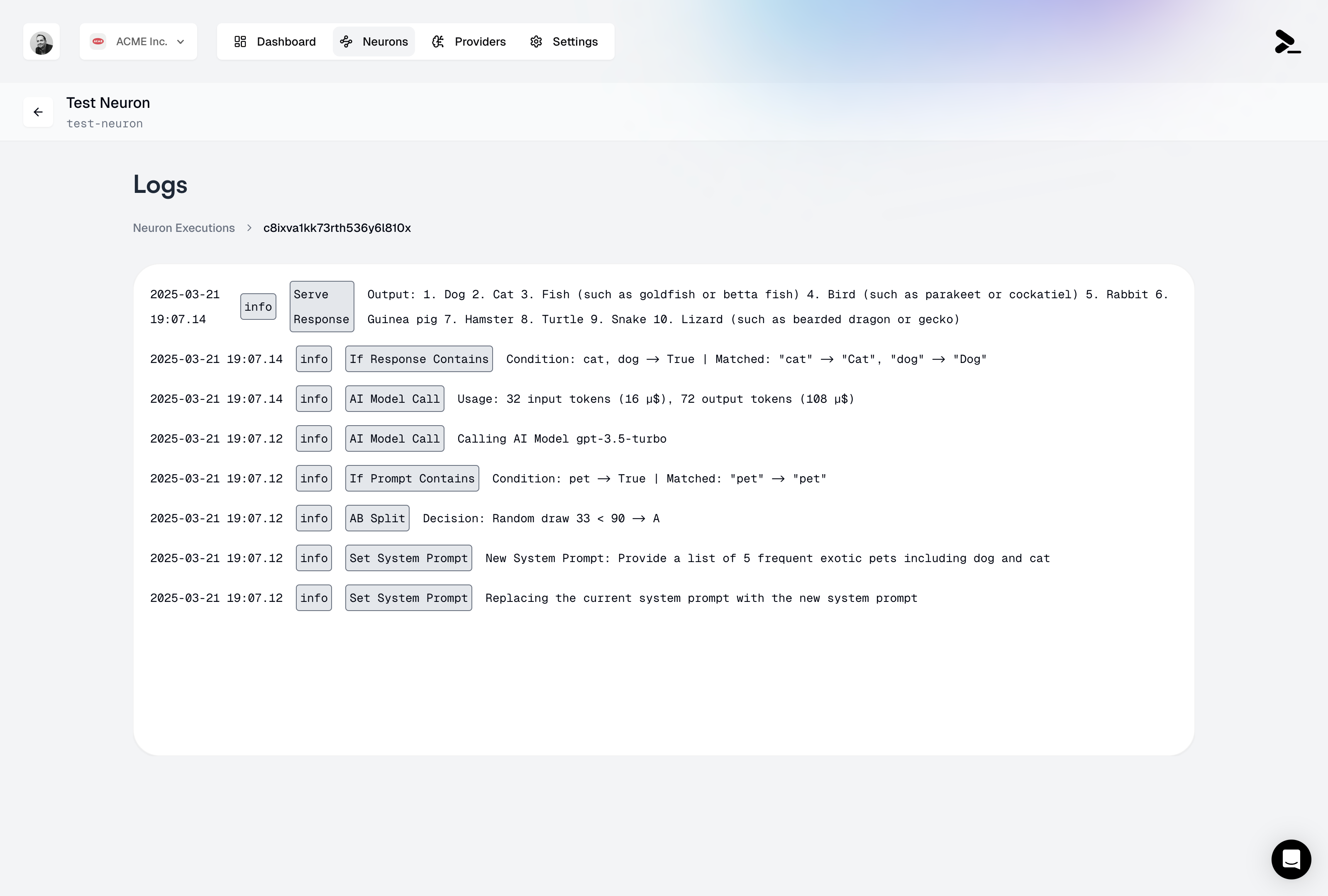

* Identify patterns in failed executions through [detailed execution logs](/neurons/logging)

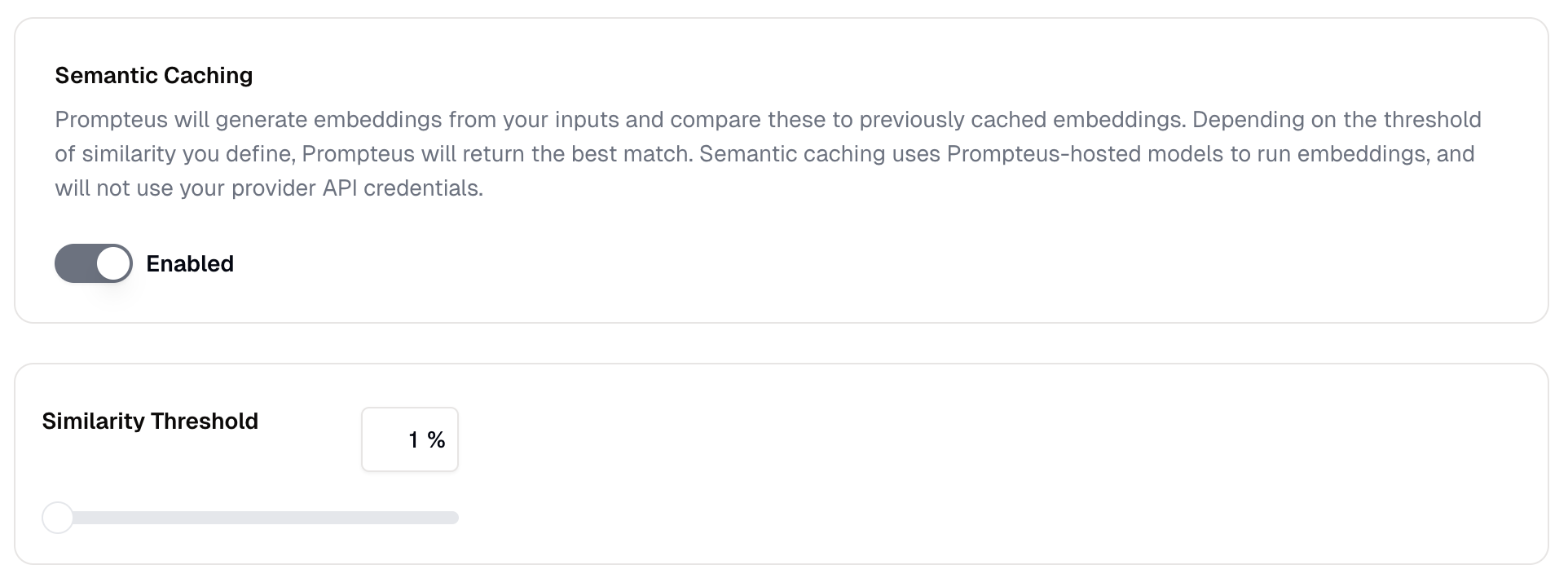

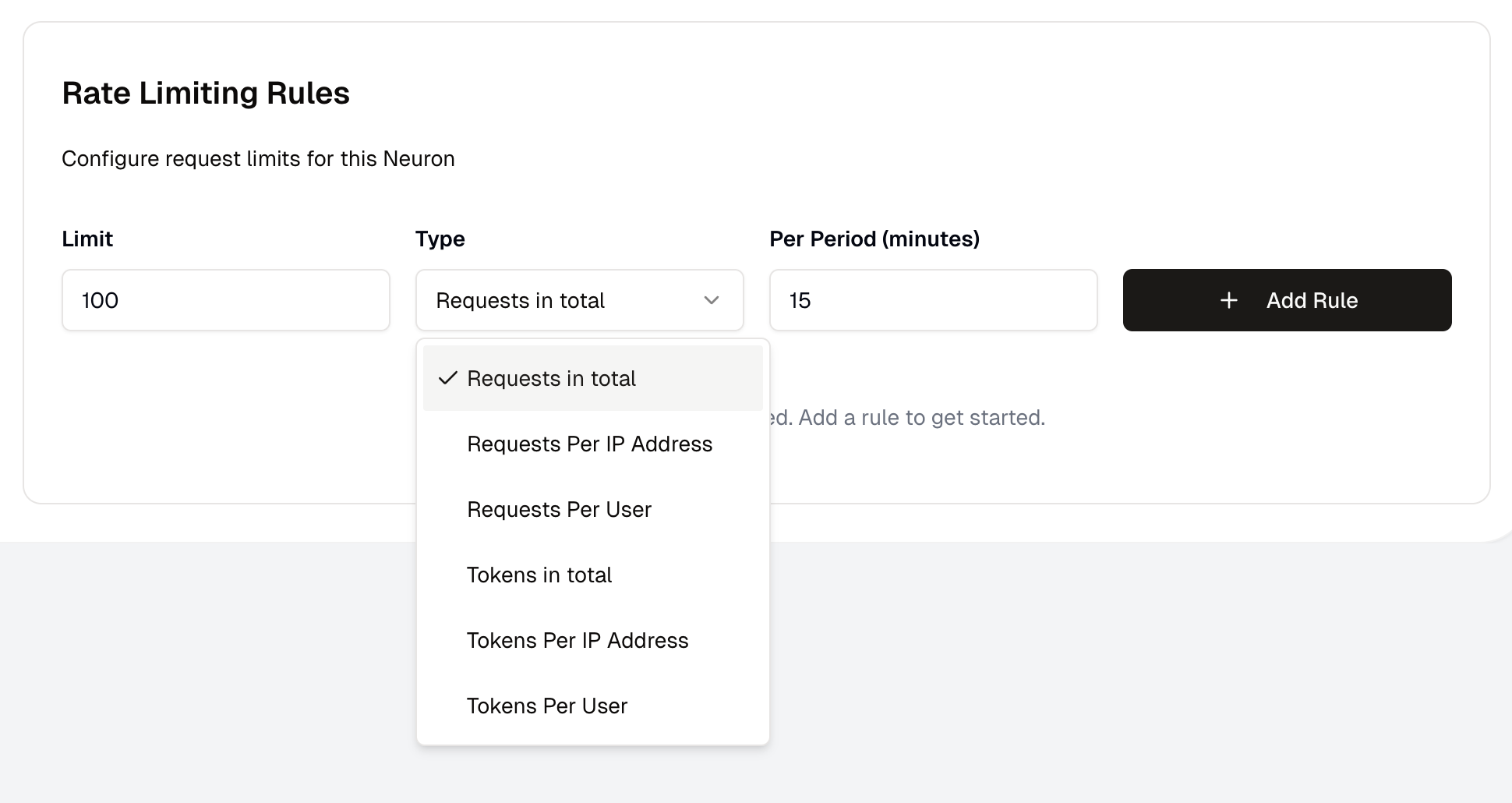

* [Configure rate limiting](/neurons/settings/rate-limiting) based on usage patterns

2. **Optimize Costs**

* Monitor microdollar usage to identify expensive operations

* Track caching savings to validate your [caching strategy](/neurons/settings/caching)

* Adjust [access controls](/neurons/settings/access-control) if you notice unexpected usage patterns

3. **Capacity Planning**

* Use cumulative usage data to forecast future needs

* Identify peak usage periods

* Plan for scaling based on growth trends

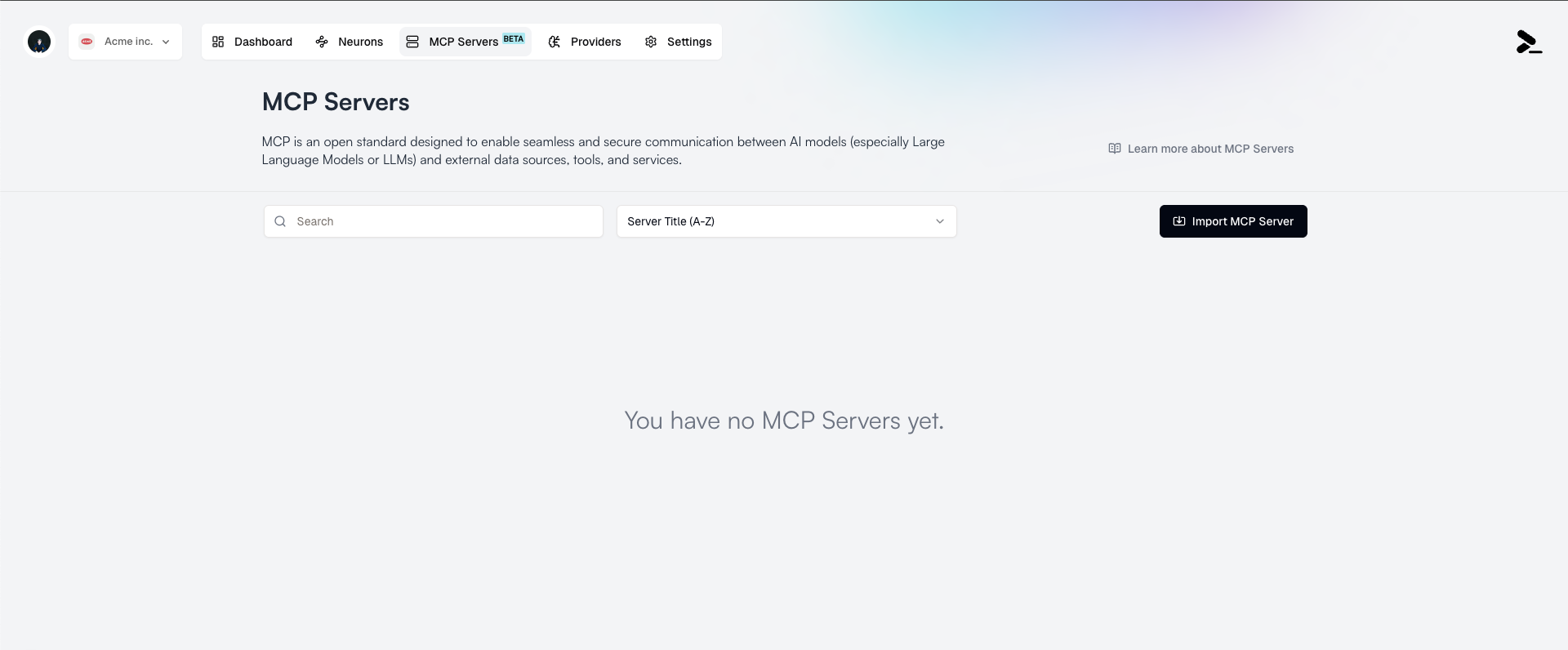

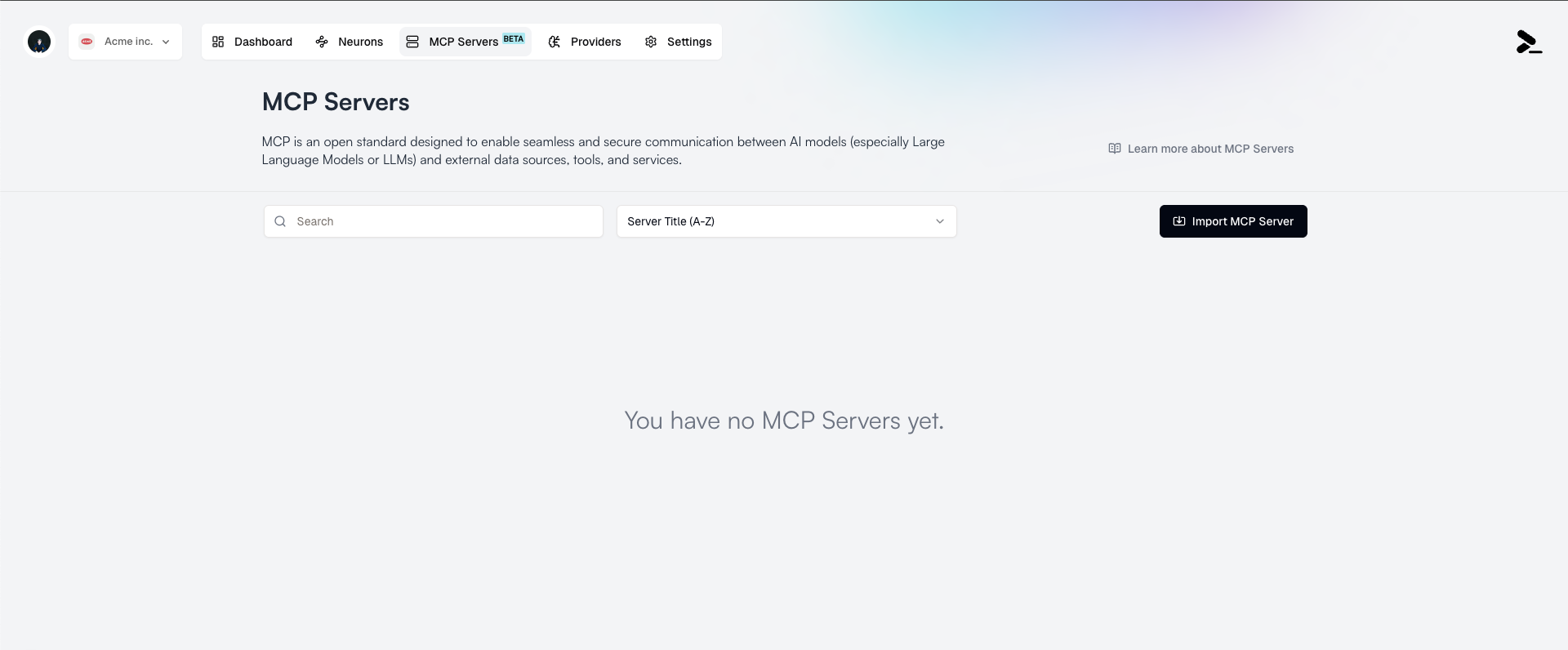

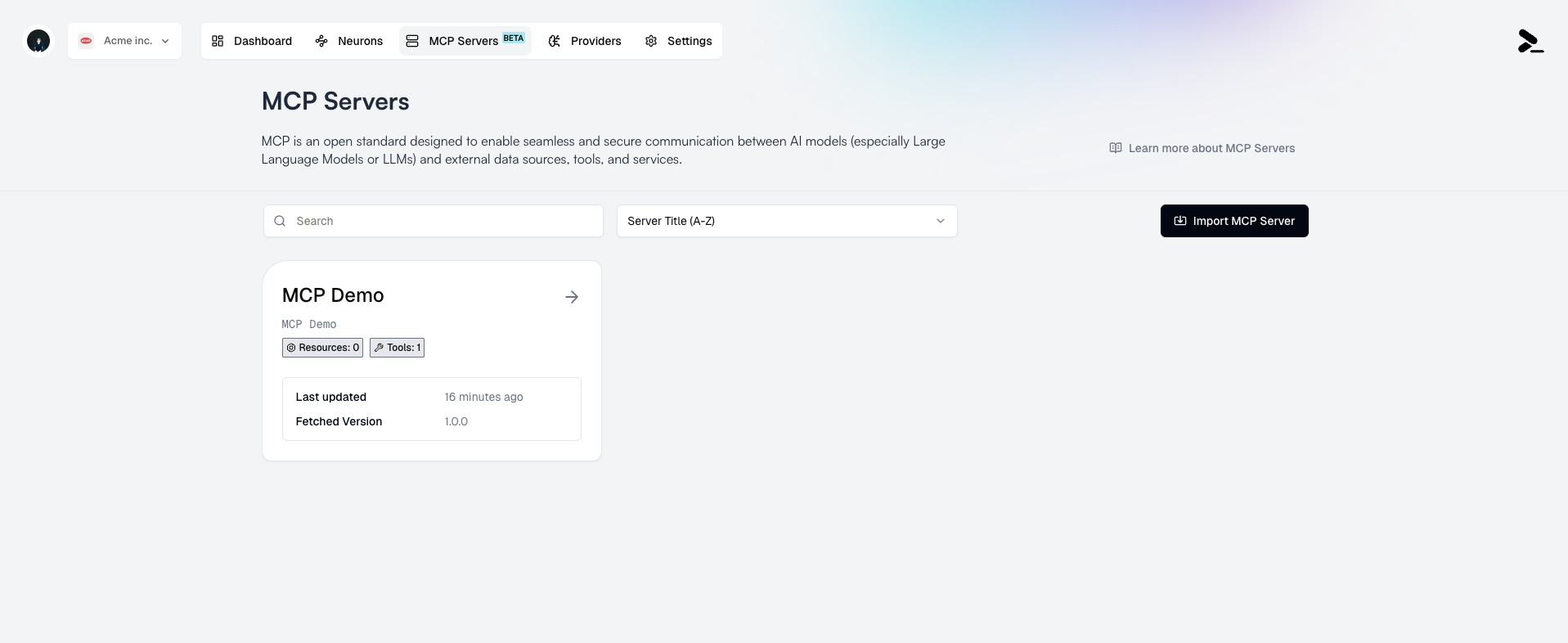

# MCP Servers

Source: https://docs.prompteus.com/features/mcp-servers

Connect remote MCP Servers to access Resources and Tools in your Neurons.

MCP Servers, and specifically the use of remote MCP servers, are nascent

technology subject to rapid and frequent change.

## Introduction

[Model Context Protocol (MCP)](https://modelcontextprotocol.io/introduction) is an open protocol that standardizes how applications provide context to LLMs.

The MCP Servers feature in Prompteus allows users to import and configure remotely hosted MCP Servers over the [Streamable HTTP transport](https://modelcontextprotocol.io/specification/2025-03-26/basic/transports#streamable-http) to access and use [Resources](https://modelcontextprotocol.io/docs/concepts/resources) and [Tools](https://modelcontextprotocol.io/docs/concepts/tools) in your Neurons.

Specification around remote MCP Servers are [still in

development](https://modelcontextprotocol.io/quickstart/server#why-claude-for-desktop-and-not-claude-ai).

In the MCP [General architecture](https://modelcontextprotocol.io/introduction#general-architecture), Prompteus is an MCP Host, and Neurons (and more specifically, the Call AI Model node) within Prompteus are MCP Clients.

## Importing an MCP Server

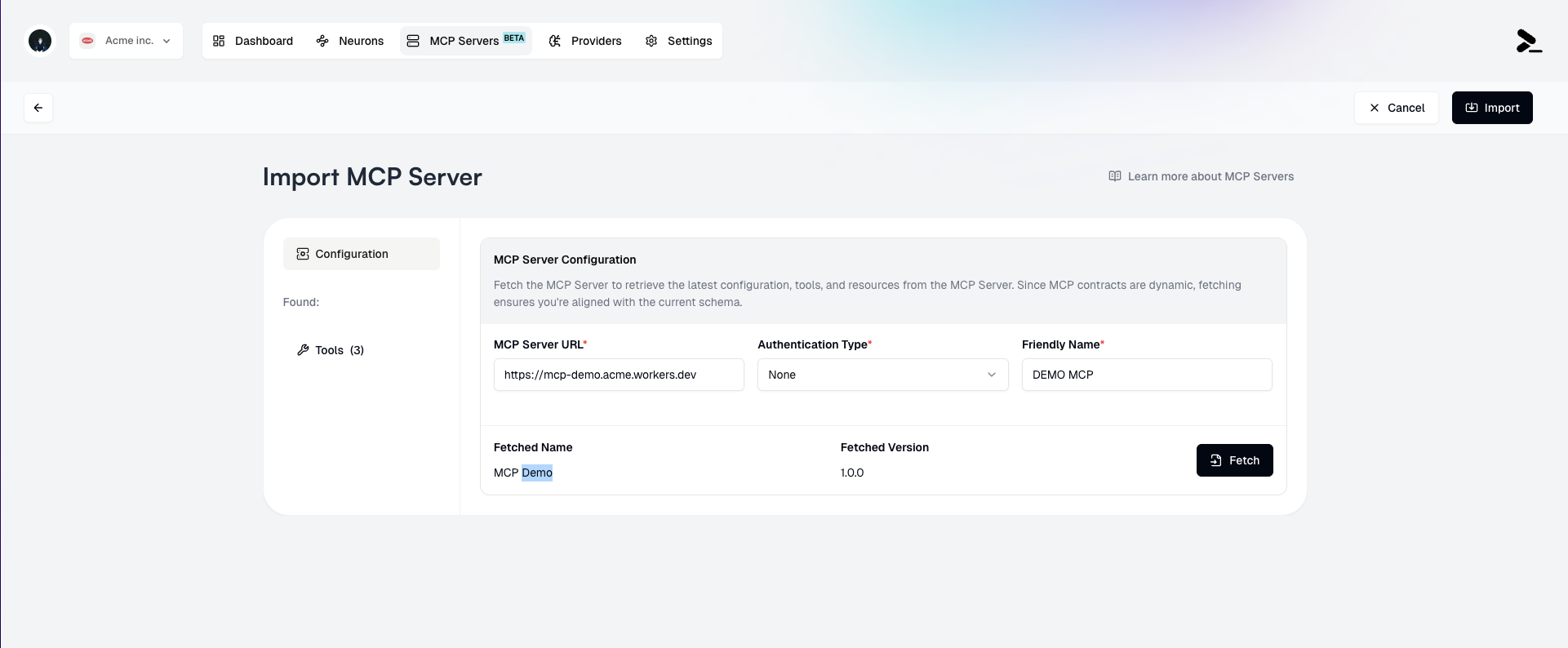

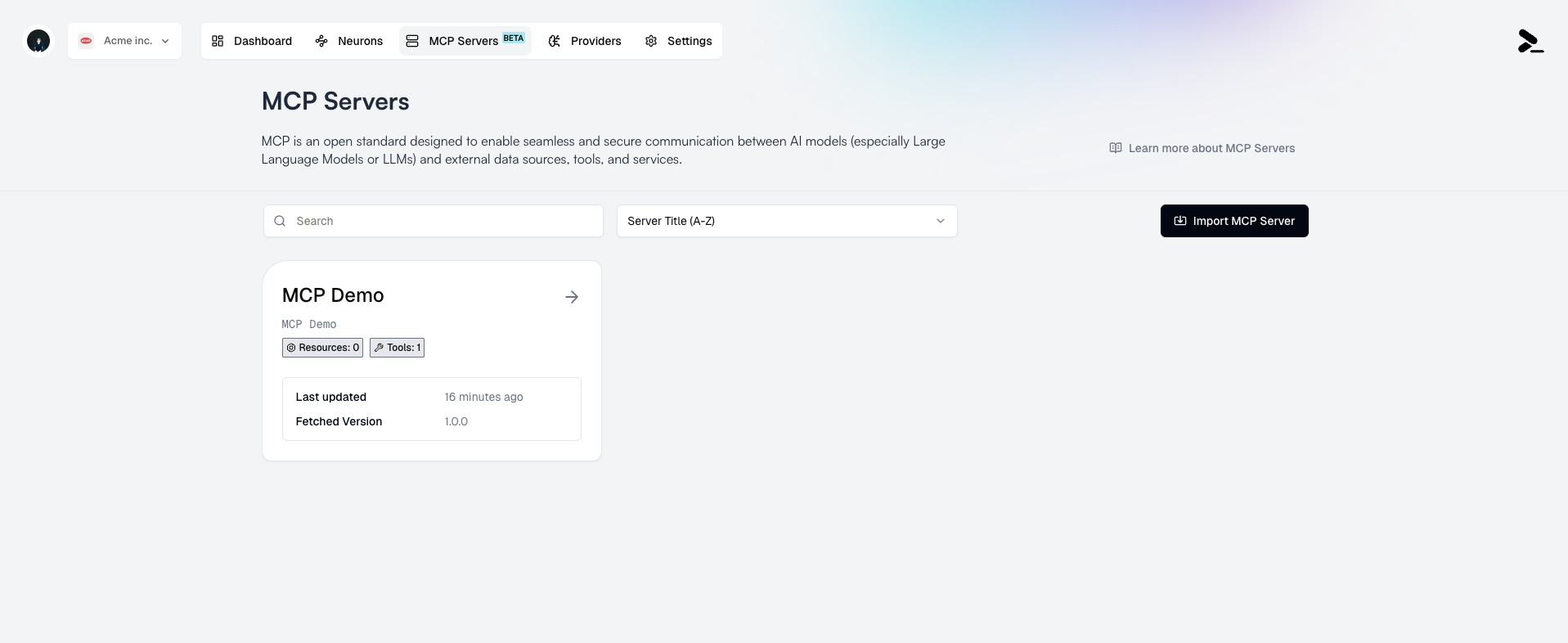

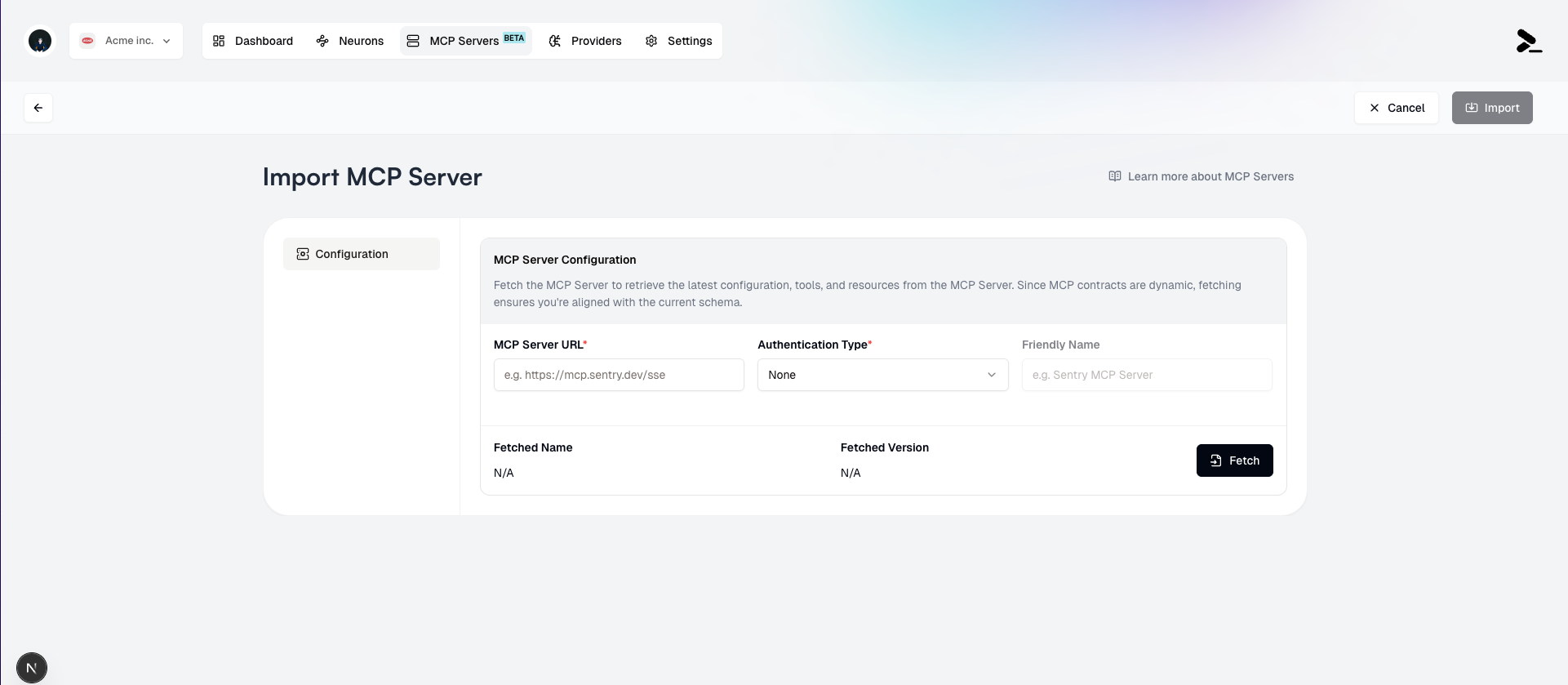

To import an MCP Server in Prompteus:

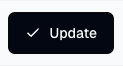

1. Navigate to the MCP Servers page, then click the **Import MCP Server** button.

The dashboard consists of four main panels that give you a comprehensive view of your Neurons' performance and costs:

### Neuron Execution

The Neuron Execution panel shows the success rate of your Neuron executions through a pie chart visualization. It displays:

* Successful executions (green)

* Failed executions (if any, in red)

* Time range filters: 1d, 7d, and 30d

This metric helps you monitor the reliability of your Neurons and quickly identify if there are any issues that need attention.

### Microdollar Usage

The Microdollar Usage panel shows your spending over time. A microdollar (μ\$) is one millionth of a dollar, allowing for precise tracking of API costs at a very granular level. The graph shows:

* Usage patterns over time

* Spending spikes and trends

* Time range filters: 1d, 7d, and 30d

Microdollars (μ\$) are used to track costs with high precision. For example:

* μ\$1,000,000 = \$1.00

* μ\$100,000 = \$0.10

* μ\$1,000 = \$0.001

### Cumulative Usage

The Cumulative Usage panel tracks your total resource consumption, with options to view:

* Total tokens processed

* Input tokens

* Output tokens

* Total spend

* Time range filters: 1d, 7d, and 30d

For better readability, the dashboard automatically switches between dollars (\$) and microdollars (μ\$) based on the amount:

* Large amounts (≥ \$1.00) are shown in dollars (e.g., \$118,954.925)

* Small amounts (\< \$1.00) are shown in microdollars (e.g., μ\$100,000)

This helps you understand your overall usage patterns and plan capacity accordingly.

### Caching Savings

The Caching Savings panel shows how much you've saved through [caching](/neurons/settings/caching). It displays:

* Total amount saved through cache hits

* Savings over time graph

* Time range filters: 1d, 7d, and 30d

Like the Cumulative Usage panel, this panel also automatically switches between dollars and microdollars for better readability:

* Significant savings are displayed in dollars (e.g., \$13,236.50)

* Smaller savings are displayed in microdollars (e.g., μ\$13,236)

The automatic unit switching between dollars (\$) and microdollars (μ\$) helps you quickly understand the scale of usage and savings:

* Microdollars (μ\$) provide precision for small amounts

* Dollars (\$) offer familiarity for larger amounts

* The switch happens automatically at the \$0.0001 threshold

## Using Dashboard Insights

Here are some ways to use dashboard insights effectively:

1. **Monitor Performance**

* Track success rates to ensure your Neurons are functioning properly

* Identify patterns in failed executions through [detailed execution logs](/neurons/logging)

* [Configure rate limiting](/neurons/settings/rate-limiting) based on usage patterns

2. **Optimize Costs**

* Monitor microdollar usage to identify expensive operations

* Track caching savings to validate your [caching strategy](/neurons/settings/caching)

* Adjust [access controls](/neurons/settings/access-control) if you notice unexpected usage patterns

3. **Capacity Planning**

* Use cumulative usage data to forecast future needs

* Identify peak usage periods

* Plan for scaling based on growth trends

# MCP Servers

Source: https://docs.prompteus.com/features/mcp-servers

Connect remote MCP Servers to access Resources and Tools in your Neurons.

MCP Servers, and specifically the use of remote MCP servers, are nascent

technology subject to rapid and frequent change.

## Introduction

[Model Context Protocol (MCP)](https://modelcontextprotocol.io/introduction) is an open protocol that standardizes how applications provide context to LLMs.

The MCP Servers feature in Prompteus allows users to import and configure remotely hosted MCP Servers over the [Streamable HTTP transport](https://modelcontextprotocol.io/specification/2025-03-26/basic/transports#streamable-http) to access and use [Resources](https://modelcontextprotocol.io/docs/concepts/resources) and [Tools](https://modelcontextprotocol.io/docs/concepts/tools) in your Neurons.

Specification around remote MCP Servers are [still in

development](https://modelcontextprotocol.io/quickstart/server#why-claude-for-desktop-and-not-claude-ai).

In the MCP [General architecture](https://modelcontextprotocol.io/introduction#general-architecture), Prompteus is an MCP Host, and Neurons (and more specifically, the Call AI Model node) within Prompteus are MCP Clients.

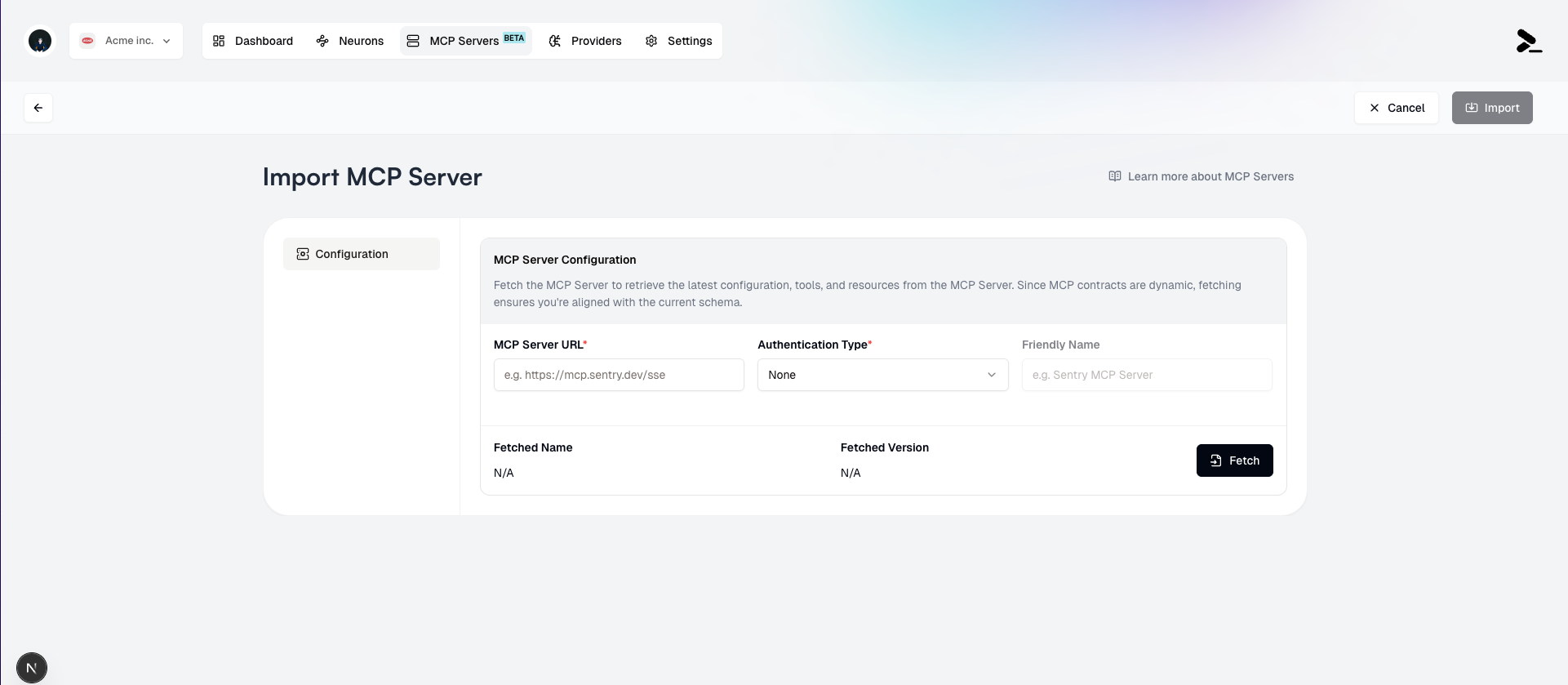

## Importing an MCP Server

To import an MCP Server in Prompteus:

1. Navigate to the MCP Servers page, then click the **Import MCP Server** button.

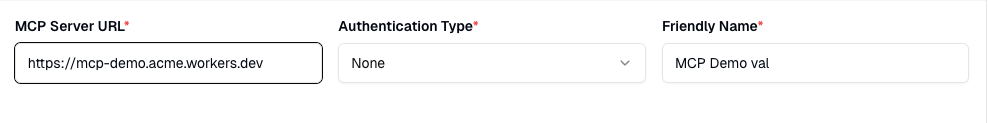

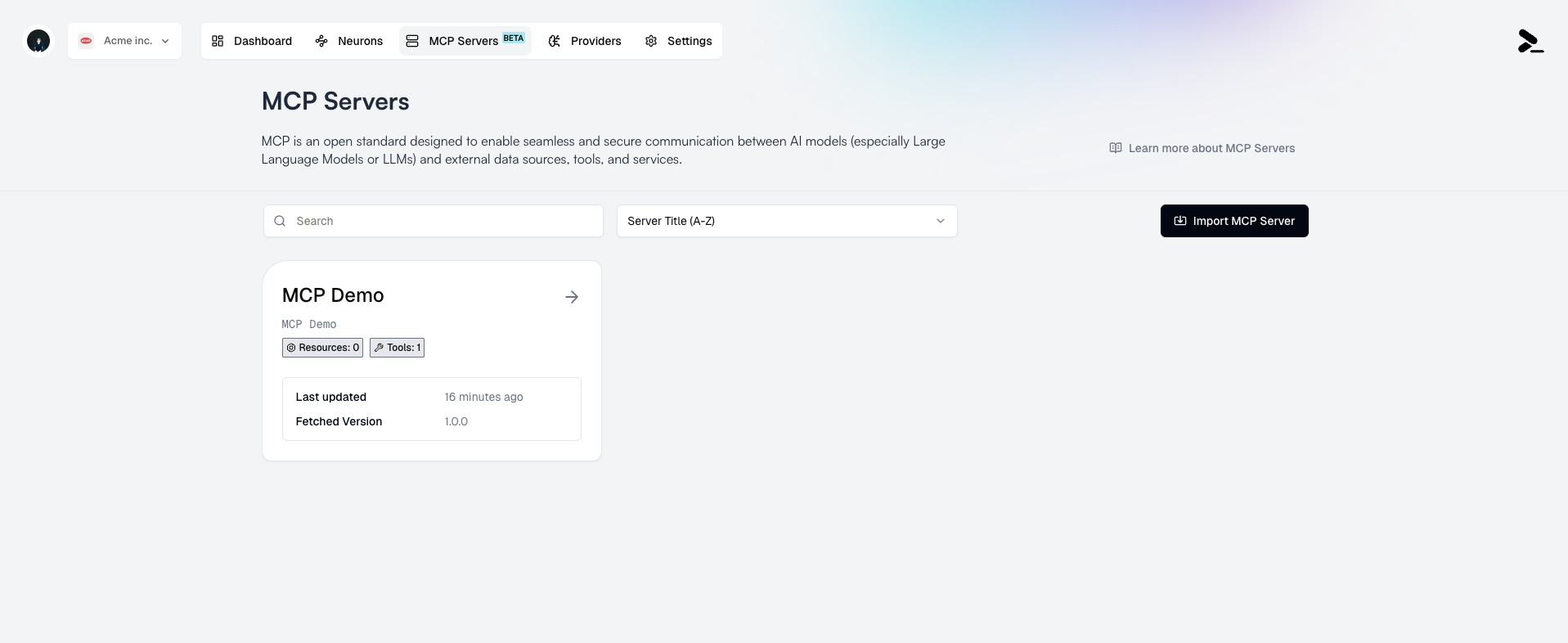

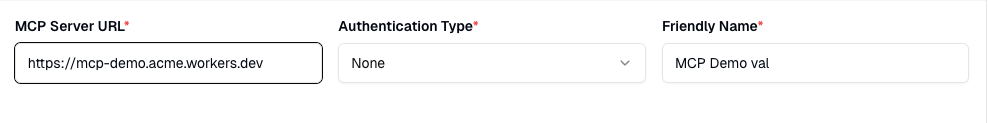

2. Enter in the required fields:

* **MCP Server URL**: The URL of the MCP Server you want to import.

* **Authentication Type**: The authentication type for the MCP Server (default is None).

2. Enter in the required fields:

* **MCP Server URL**: The URL of the MCP Server you want to import.

* **Authentication Type**: The authentication type for the MCP Server (default is None).

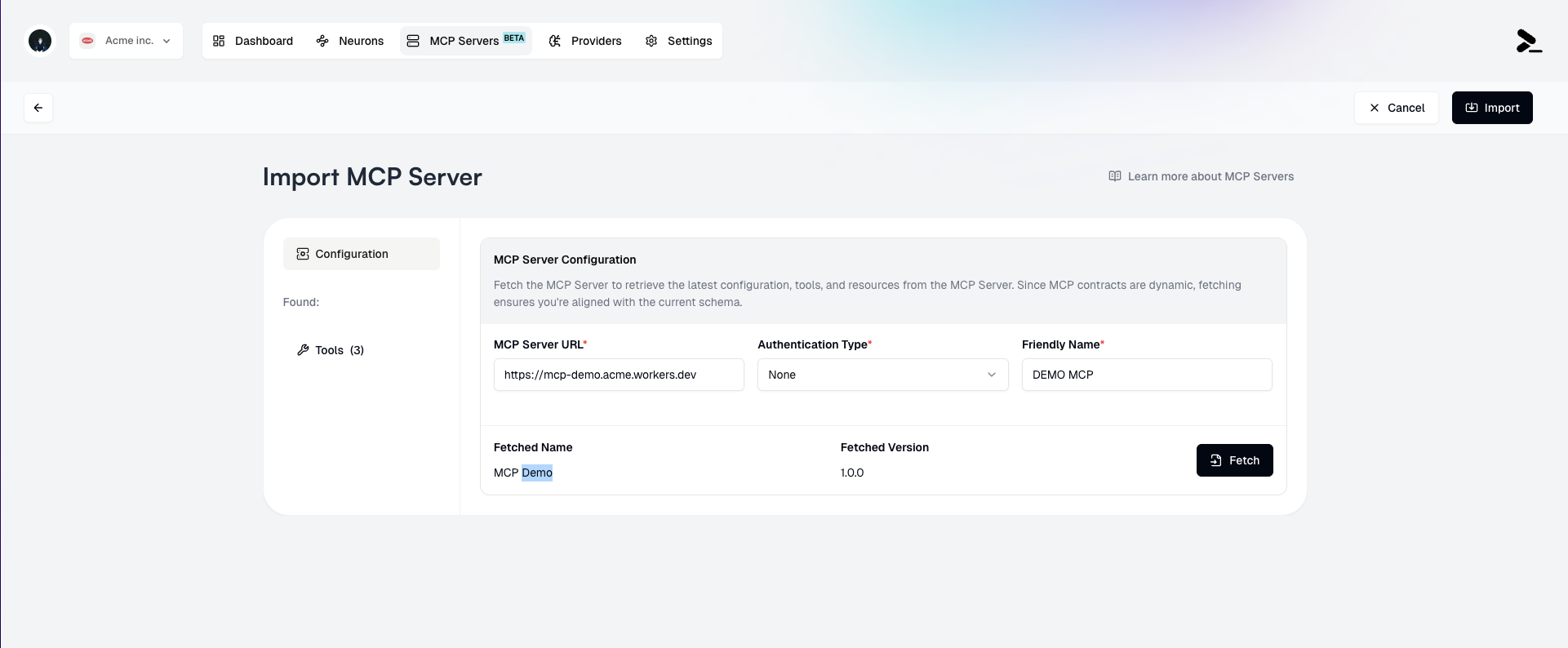

3. Click the **Fetch** button to validate the URL and initialize the server.

3. Click the **Fetch** button to validate the URL and initialize the server.

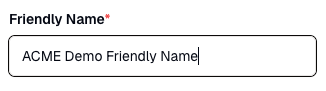

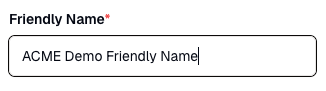

4. Add a **Friendly Name** for the MCP Server if desired. This is optional, but may be useful for distinguishing between multiple MCP Servers that have different subsets of **Tools** and **Resources** selected.

4. Add a **Friendly Name** for the MCP Server if desired. This is optional, but may be useful for distinguishing between multiple MCP Servers that have different subsets of **Tools** and **Resources** selected.

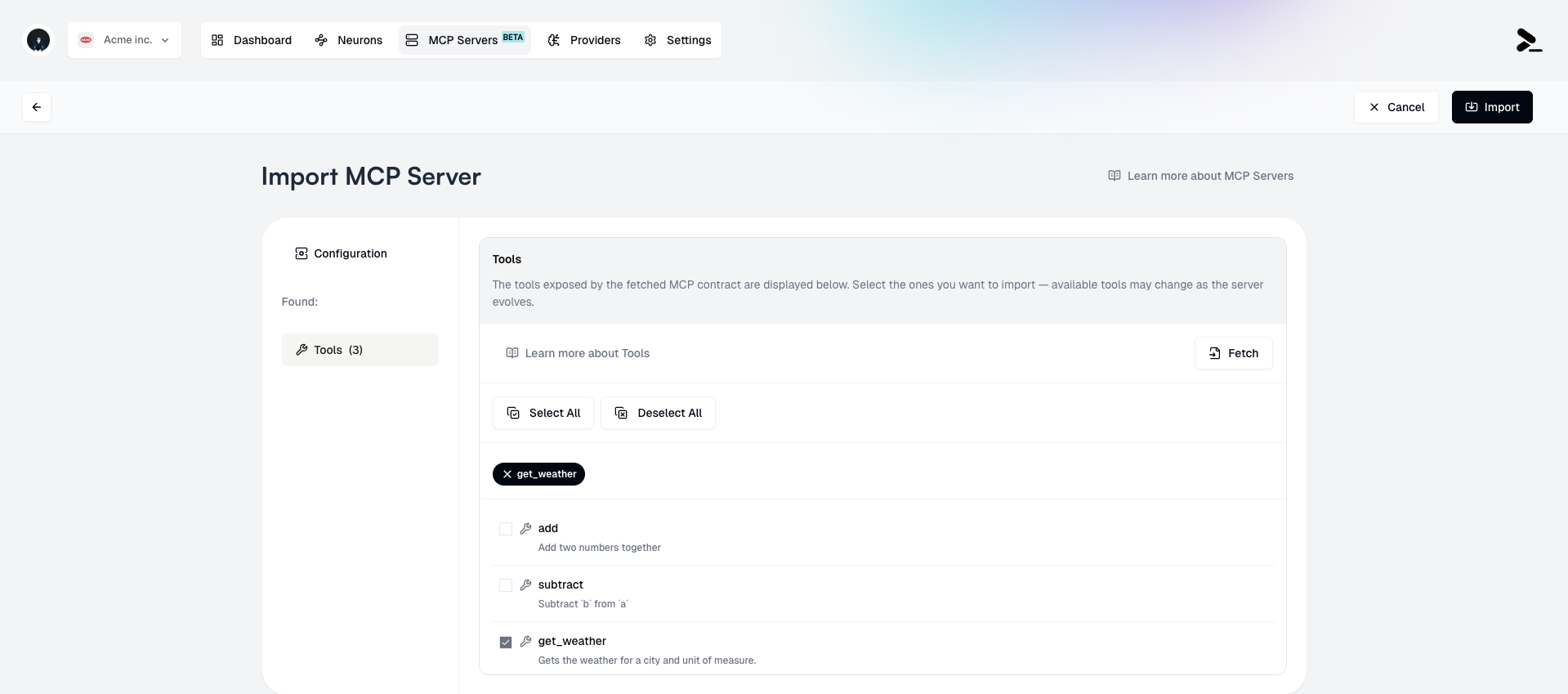

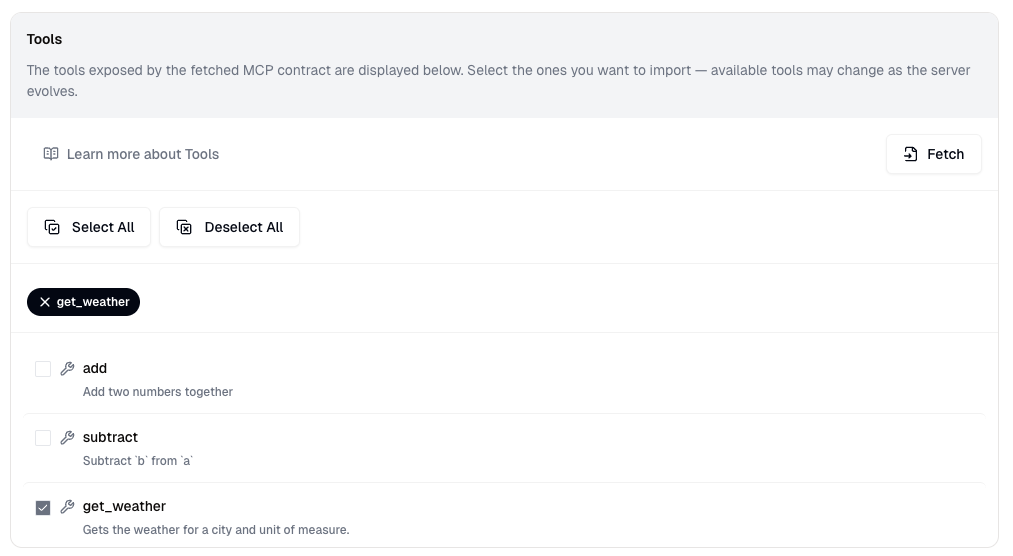

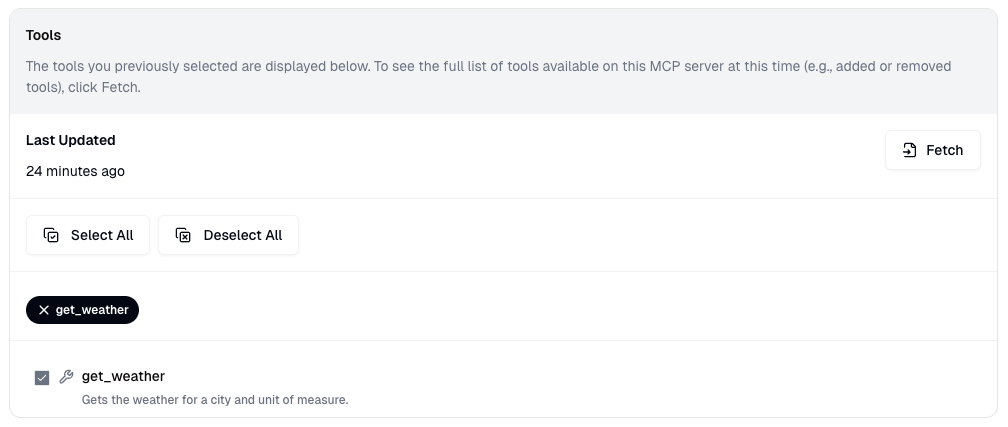

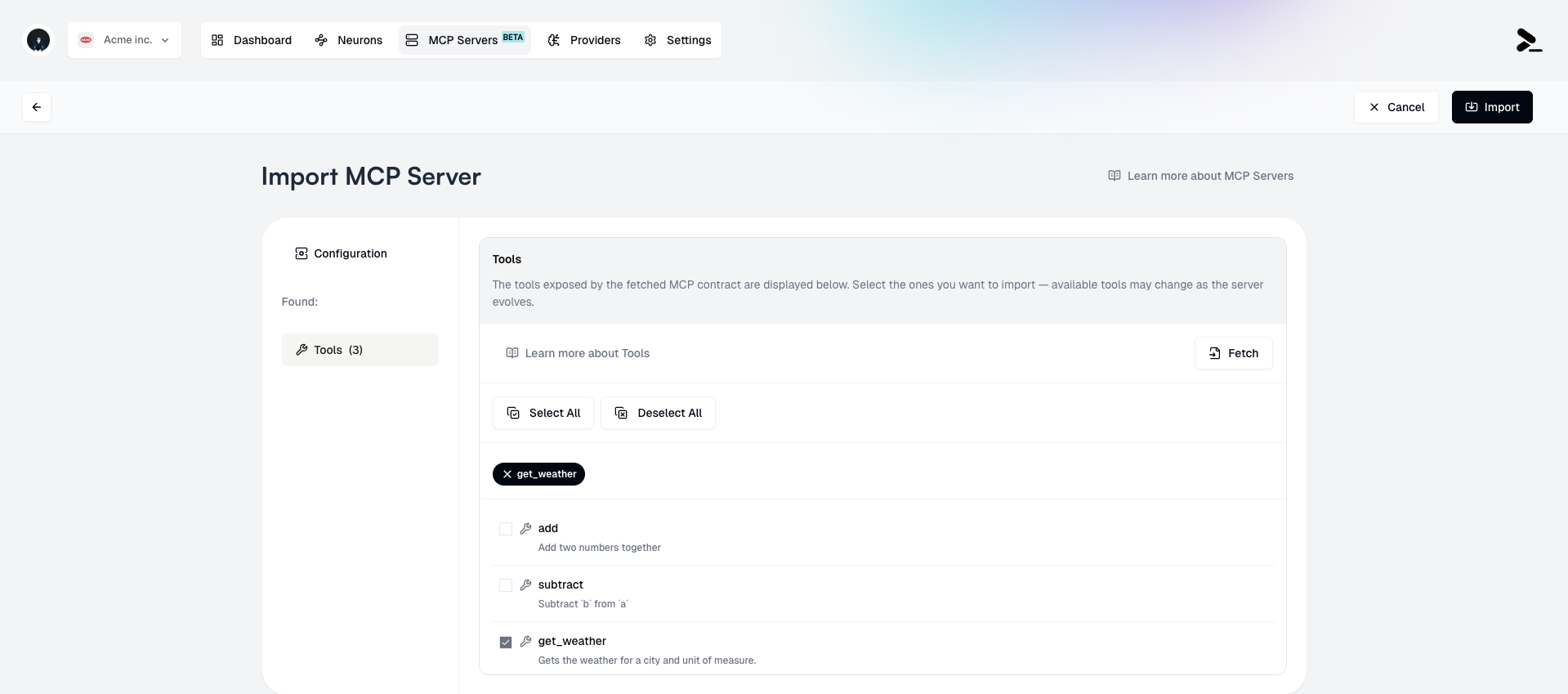

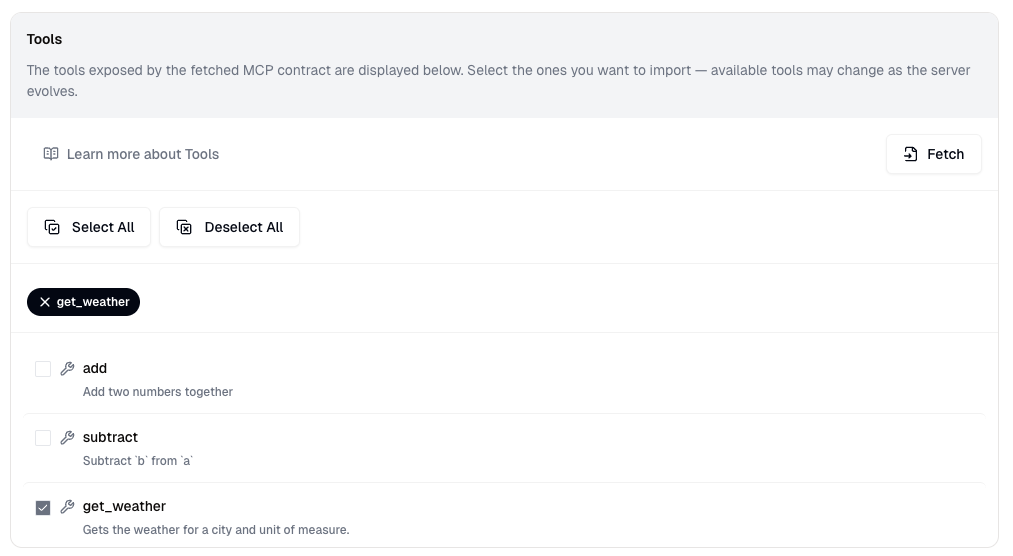

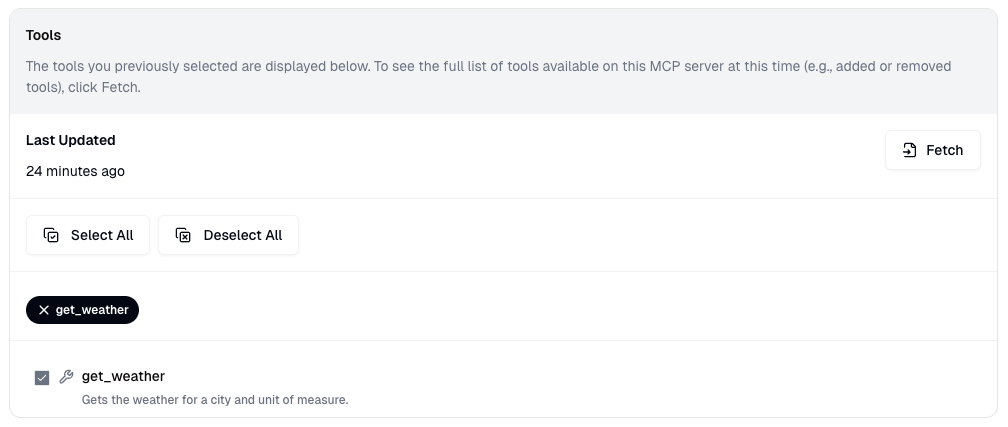

5. If the server is successfully initialized, you will see a list of available **Tools** and **Resources** for selection.

5. If the server is successfully initialized, you will see a list of available **Tools** and **Resources** for selection.

6. Select the **Tools** and **Resources** you want to import from the MCP Server.

Users may import the same MCP Server multiple times, with different subsets of

**Tools** and **Resources** selected.

6. Select the **Tools** and **Resources** you want to import from the MCP Server.

Users may import the same MCP Server multiple times, with different subsets of

**Tools** and **Resources** selected.

7. Click the **Import** button to finish the import.

7. Click the **Import** button to finish the import.

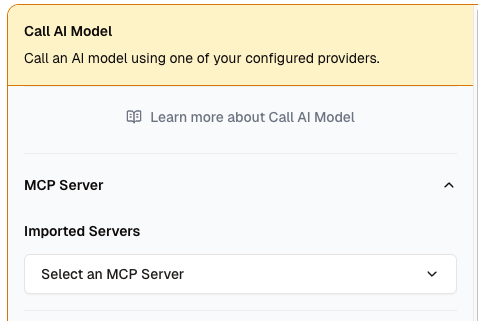

## Using an MCP Server in a Neuron workflow

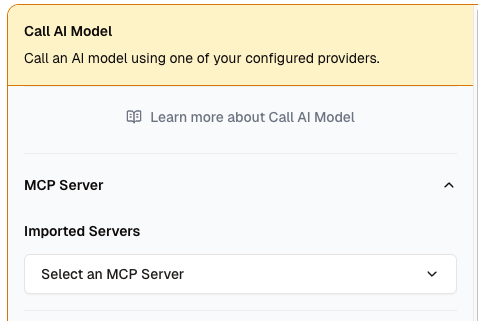

**MCP Servers** are accessed from the [Call AI Model](/neurons/editor/nodes/call-ai-model) node in the Neuron workflow.

To use an MCP Server's tools or resources in a Neuron workflow:

1. Navigate to the [editor](/neurons/editor/visual-editor) of a Neuron.

2. Add a [Call AI Model](/neurons/editor/nodes/call-ai-model) node to the workflow, if one doesn't already exist.

3. In the [Call AI Model](/neurons/editor/nodes/call-ai-model) node's settings, select one or more MCP Servers from the **Select an MCP Server** dropdown.

If you have multiple MCP Servers imported, you can select multiple MCP Servers

to use in a single Neuron workflow. Setting clear and descriptive **Friendly

Names** for MCP Servers can help you distinguish between them.

## Using an MCP Server in a Neuron workflow

**MCP Servers** are accessed from the [Call AI Model](/neurons/editor/nodes/call-ai-model) node in the Neuron workflow.

To use an MCP Server's tools or resources in a Neuron workflow:

1. Navigate to the [editor](/neurons/editor/visual-editor) of a Neuron.

2. Add a [Call AI Model](/neurons/editor/nodes/call-ai-model) node to the workflow, if one doesn't already exist.

3. In the [Call AI Model](/neurons/editor/nodes/call-ai-model) node's settings, select one or more MCP Servers from the **Select an MCP Server** dropdown.

If you have multiple MCP Servers imported, you can select multiple MCP Servers

to use in a single Neuron workflow. Setting clear and descriptive **Friendly

Names** for MCP Servers can help you distinguish between them.

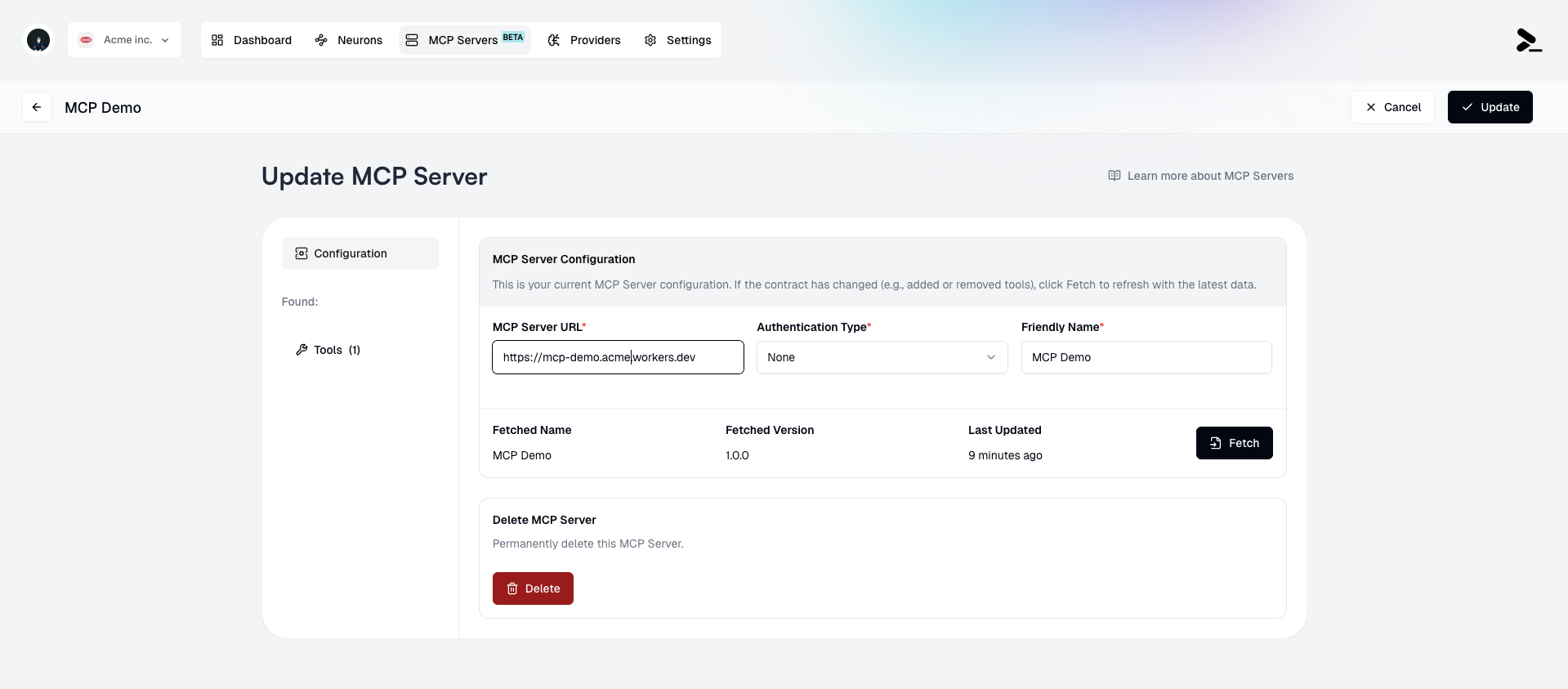

## Modifying or deleting an existing MCP Server

### Editing an MCP Server's Configuration

To make changes to an existing MCP Server:

1. Select it from the list of MCP Servers by clicking it's card.

## Modifying or deleting an existing MCP Server

### Editing an MCP Server's Configuration

To make changes to an existing MCP Server:

1. Select it from the list of MCP Servers by clicking it's card.

2. Make changes such as:

* Update the **MCP Server URL** (requires revalidation of the server)

* Update the **Authentication Type** (requires revalidation of the server)

* Update the **Friendly Name**

* Update the selected **Tools** and **Resources**

2. Make changes such as:

* Update the **MCP Server URL** (requires revalidation of the server)

* Update the **Authentication Type** (requires revalidation of the server)

* Update the **Friendly Name**

* Update the selected **Tools** and **Resources**

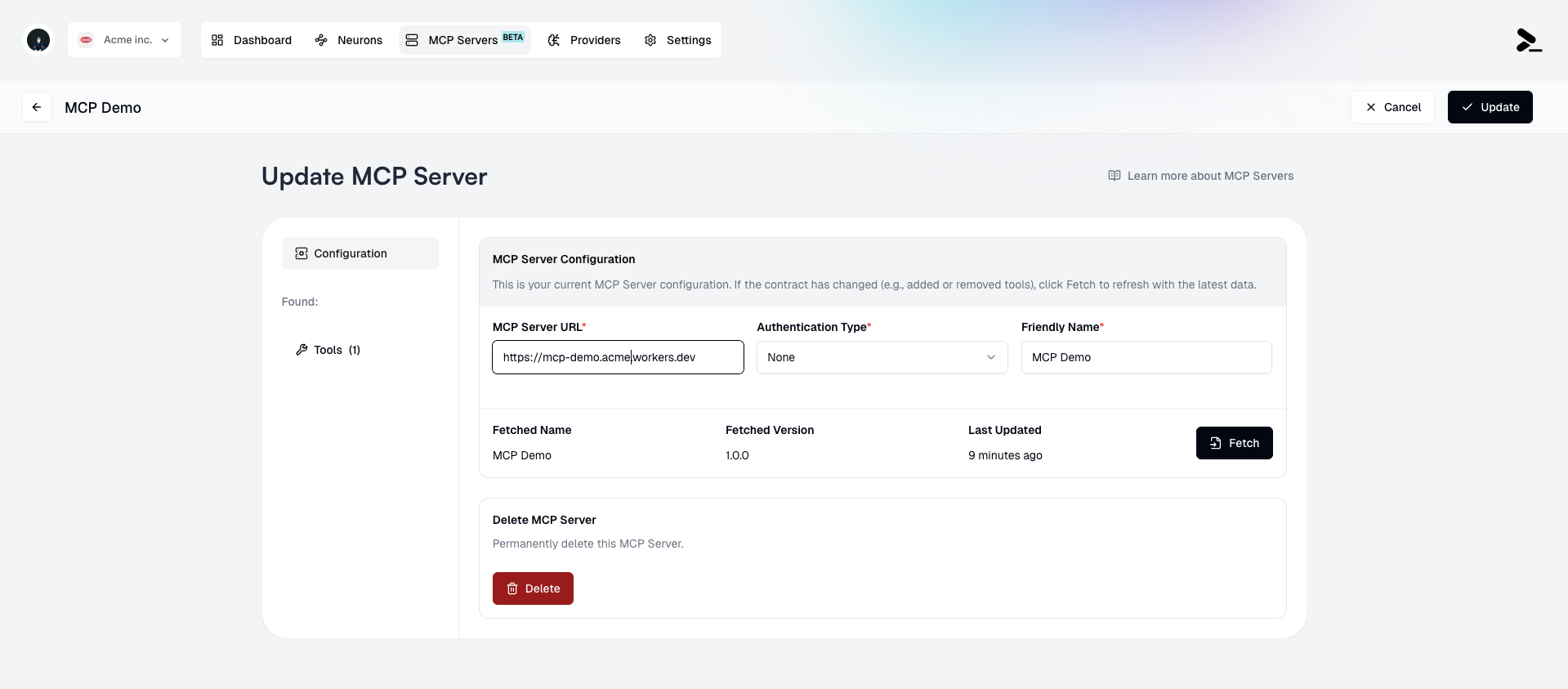

3. Click the **Update** button to save the changes.

3. Click the **Update** button to save the changes.

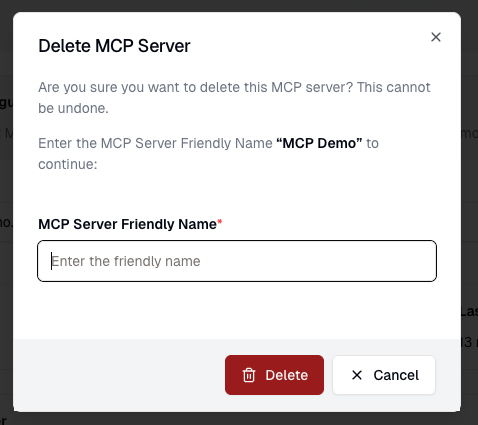

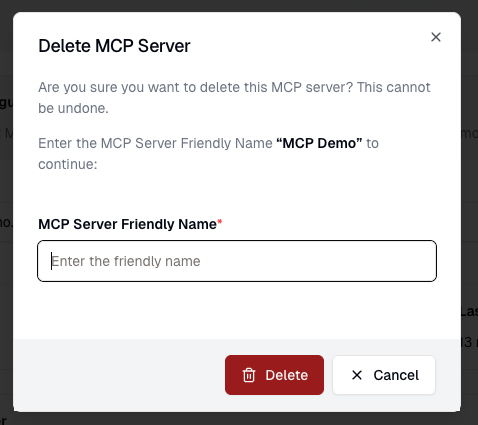

### Deleting an MCP Server

To delete an MCP Server:

1. Select it from the list of MCP Servers by clicking it's card.

### Deleting an MCP Server

To delete an MCP Server:

1. Select it from the list of MCP Servers by clicking it's card.

2. Click the **Delete** button to delete the MCP Server.

2. Click the **Delete** button to delete the MCP Server.

3. Confirm the deletion.

MCP Servers cannot be deleted if they are used in any Neuron workflows. A

warning will be displayed if this is the case.

3. Confirm the deletion.

MCP Servers cannot be deleted if they are used in any Neuron workflows. A

warning will be displayed if this is the case.

## Limitations

* Prompteus only supports remote MCP Servers configured to use the [Streamable HTTP transport](https://modelcontextprotocol.io/specification/2025-03-26/basic/transports#streamable-http).

* MCP Servers in Prompteus currently do not support importing and using{" "}

[Prompts](https://modelcontextprotocol.io/docs/concepts/prompts) within

Neurons.

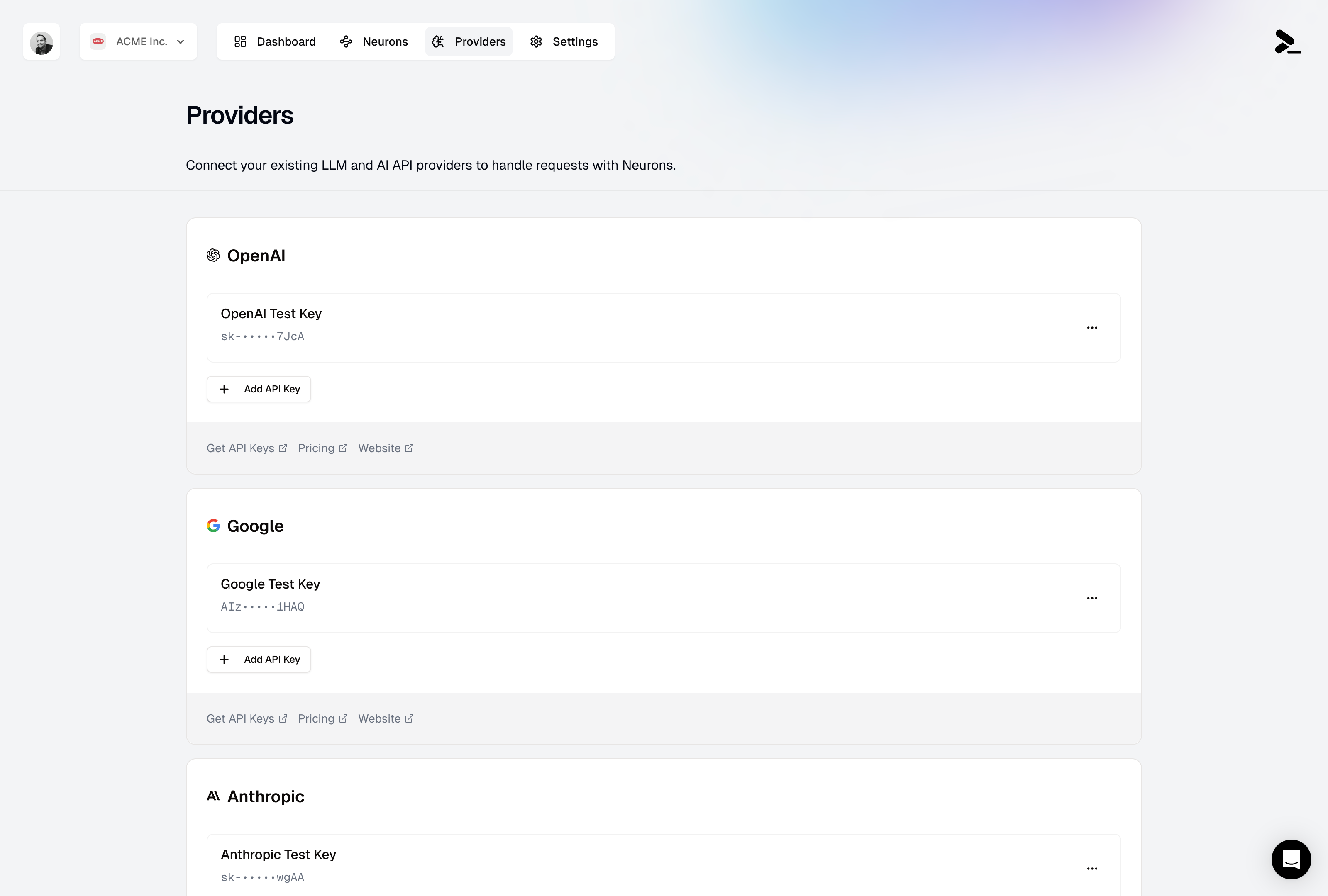

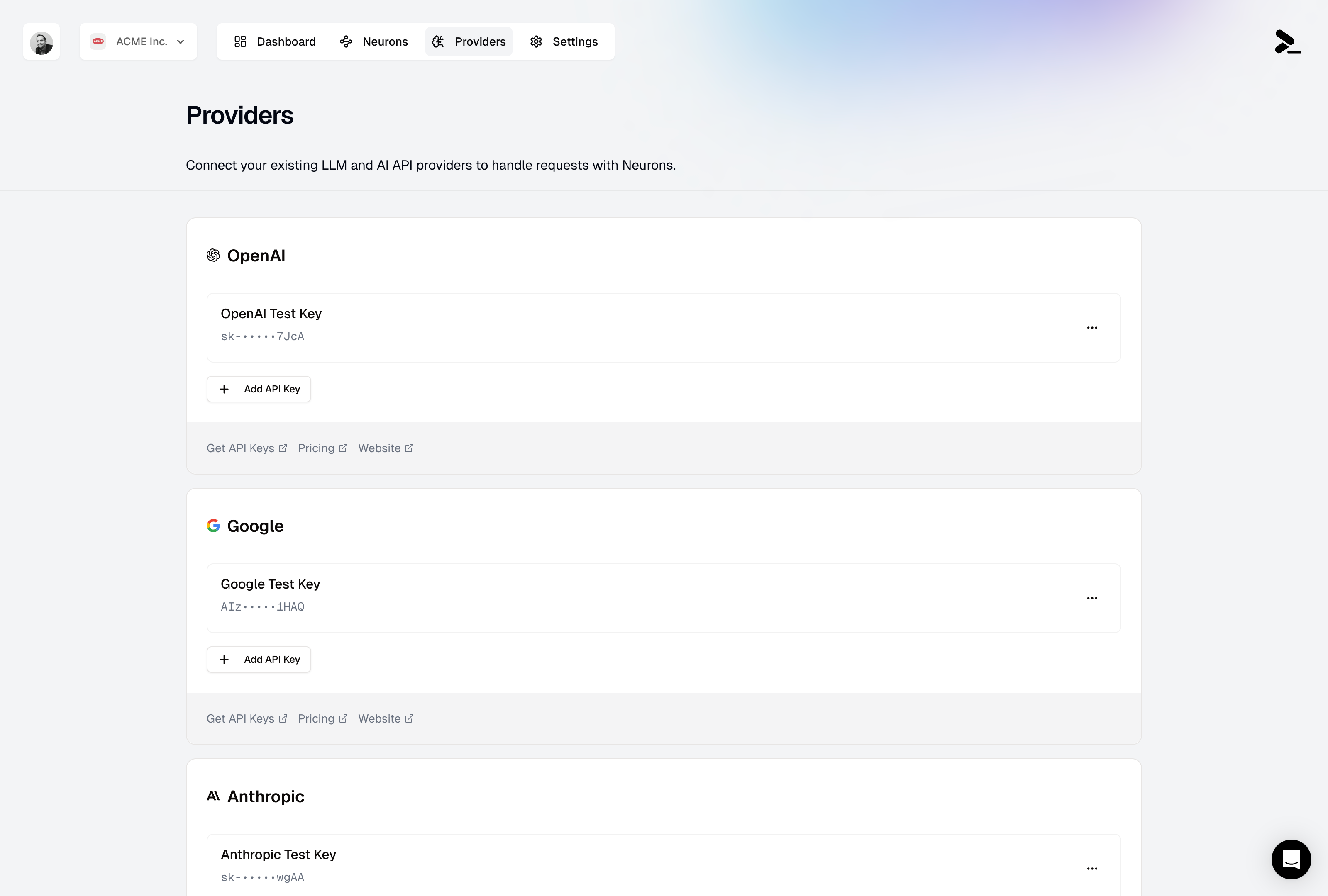

# Providers

Source: https://docs.prompteus.com/features/providers

Connect your AI provider accounts by managing API keys for OpenAI, Google, Anthropic, Cohere, and Mistral.

The Providers page allows you to manage API keys for various AI providers that your Neurons can use. You can add multiple API keys for each provider, making it easy to manage different projects, environments, or billing accounts.

## Provider Management

## Limitations

* Prompteus only supports remote MCP Servers configured to use the [Streamable HTTP transport](https://modelcontextprotocol.io/specification/2025-03-26/basic/transports#streamable-http).

* MCP Servers in Prompteus currently do not support importing and using{" "}

[Prompts](https://modelcontextprotocol.io/docs/concepts/prompts) within

Neurons.

# Providers

Source: https://docs.prompteus.com/features/providers

Connect your AI provider accounts by managing API keys for OpenAI, Google, Anthropic, Cohere, and Mistral.

The Providers page allows you to manage API keys for various AI providers that your Neurons can use. You can add multiple API keys for each provider, making it easy to manage different projects, environments, or billing accounts.

## Provider Management

### Supported Providers

Prompteus currently supports the following AI providers in the [Call AI Model](/neurons/editor/nodes/call-ai-model) node:

* OpenAI

* Google - Generative AI

* Anthropic

* Cohere

* Mistral

Support for more providers is coming soon, including custom AI API endpoints. [Contact us](mailto:support@prompteus.com) if you need a specific provider or are interested in beta-testing a new provider.

### Managing API Keys

For each provider, you can:

* Add multiple API keys

* Give each key a friendly name

* Update existing keys

* Delete keys when they're no longer needed

When adding a new API key, you'll need to provide:

1. A friendly name (e.g., "Production API Key", "Testing Key")

2. The actual API key from your provider

### Multiple Keys Per Provider

You can add as many API keys as you want for each provider. This flexibility enables:

* Separate keys for development and production environments

* Different keys for various projects or teams

* Billing management across multiple accounts

* Backup keys for redundancy

Never share your API keys or commit them to version control. If a key is compromised, immediately rotate it at your provider and update it in Prompteus.

## Related Resources

* [Dashboard](/features/dashboard) - Monitor usage and costs

* [Rate Limiting](/neurons/settings/rate-limiting) - Control API usage

* [Access Control](/neurons/settings/access-control) - Manage Neuron access

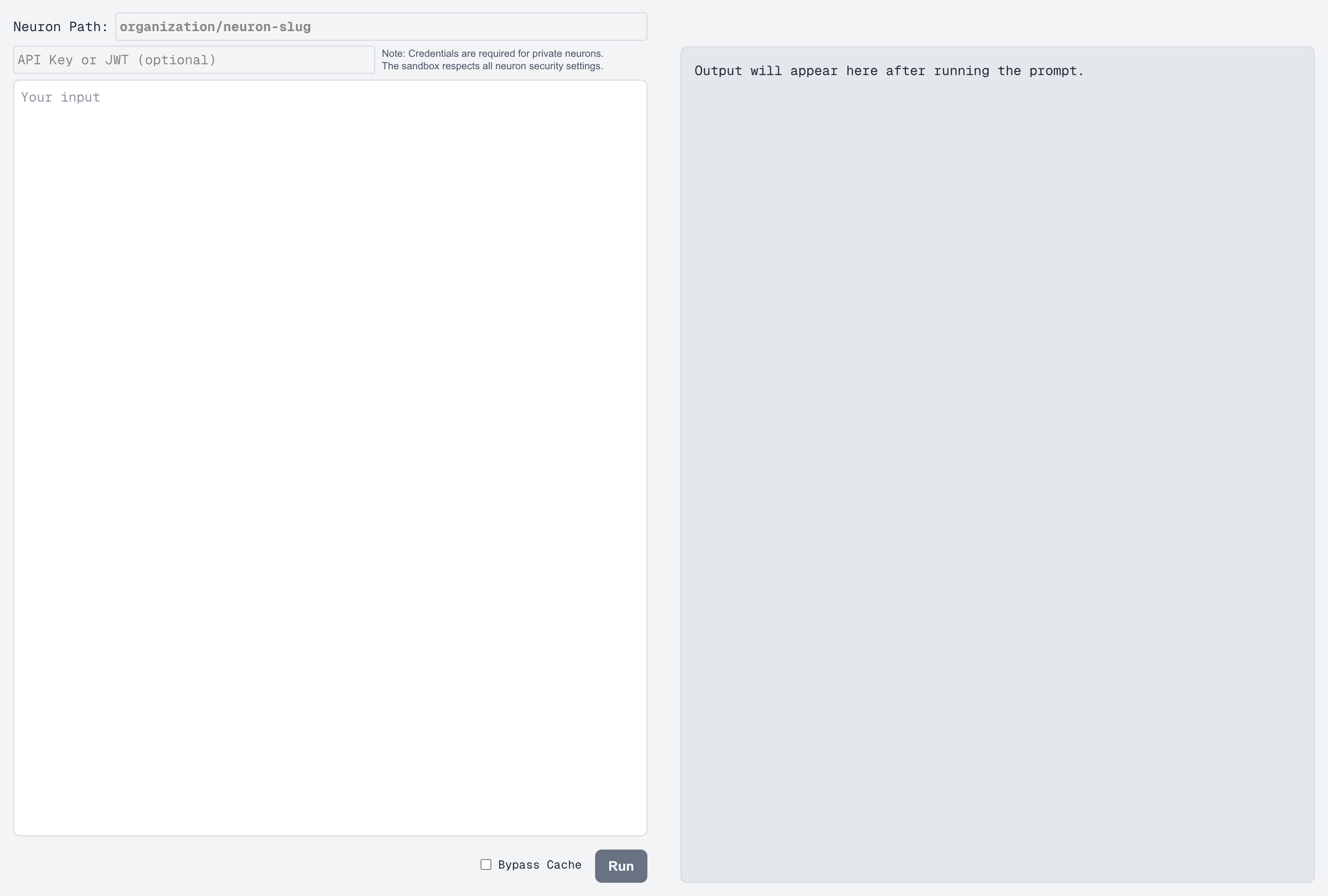

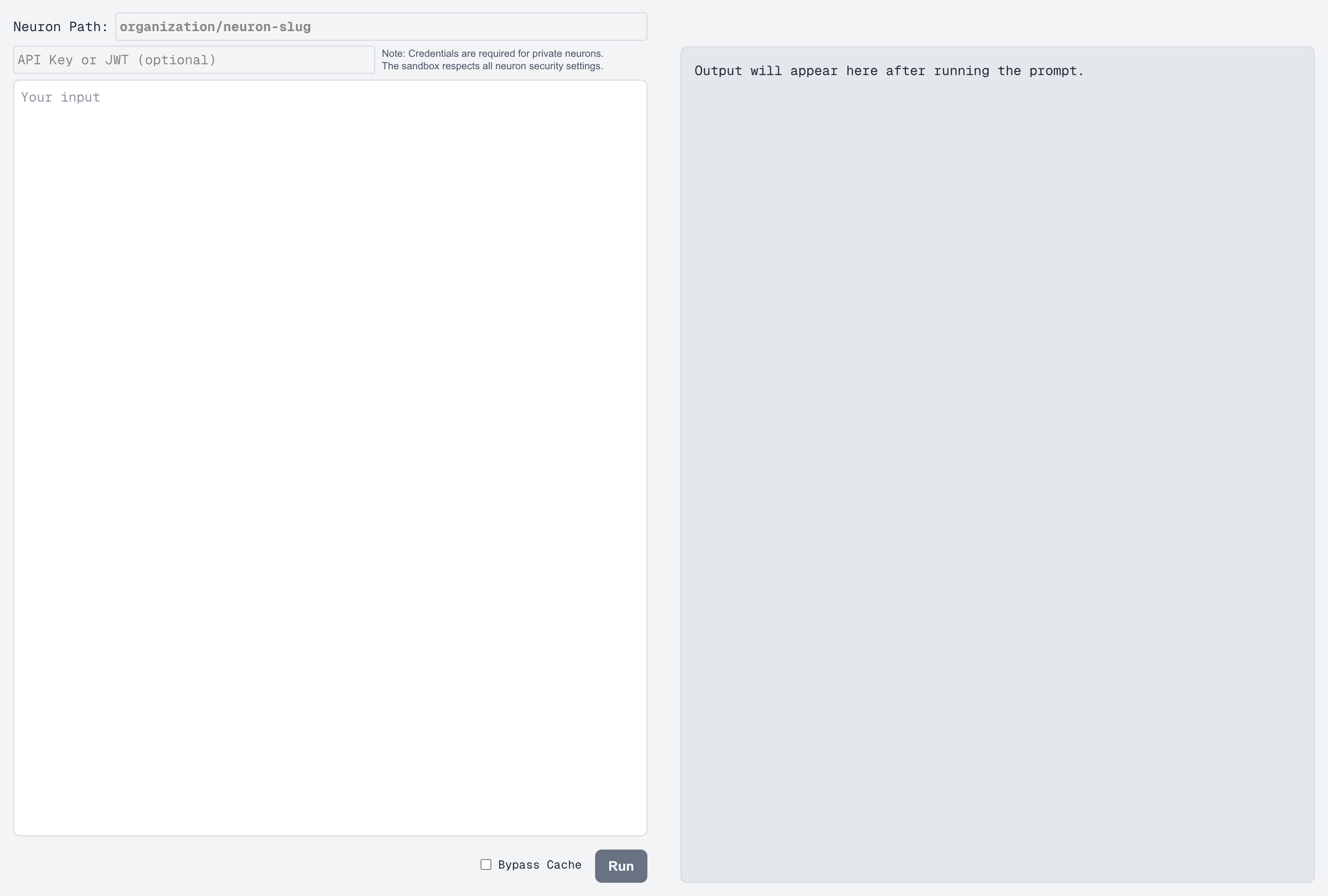

# Sandbox

Source: https://docs.prompteus.com/features/sandbox

Test and interact with your Neurons using our open-source Sandbox application.

The Sandbox is a lightweight web application that allows you to quickly test and interact with your Neurons. Available at [sandbox.prompteus.com](https://sandbox.prompteus.com), it provides a simple interface for making API calls to any Neuron endpoint.

## Features

* **Interactive Testing**: Enter your Neuron path and input, then see the results in real-time

* **Authentication Support**: Securely provide API keys or JWT tokens for protected Neurons

* **Client-Side Processing**: All API calls are processed directly in your browser

* **Cache Control**: Easily bypass cache for testing with fresh responses

* **Open Source**: The entire application is [available on GitHub](https://github.com/prompteus-ai/sandbox)

## Using the Sandbox

### Supported Providers

Prompteus currently supports the following AI providers in the [Call AI Model](/neurons/editor/nodes/call-ai-model) node:

* OpenAI

* Google - Generative AI

* Anthropic

* Cohere

* Mistral

Support for more providers is coming soon, including custom AI API endpoints. [Contact us](mailto:support@prompteus.com) if you need a specific provider or are interested in beta-testing a new provider.

### Managing API Keys

For each provider, you can:

* Add multiple API keys

* Give each key a friendly name

* Update existing keys

* Delete keys when they're no longer needed

When adding a new API key, you'll need to provide:

1. A friendly name (e.g., "Production API Key", "Testing Key")

2. The actual API key from your provider

### Multiple Keys Per Provider

You can add as many API keys as you want for each provider. This flexibility enables:

* Separate keys for development and production environments

* Different keys for various projects or teams

* Billing management across multiple accounts

* Backup keys for redundancy

Never share your API keys or commit them to version control. If a key is compromised, immediately rotate it at your provider and update it in Prompteus.

## Related Resources

* [Dashboard](/features/dashboard) - Monitor usage and costs

* [Rate Limiting](/neurons/settings/rate-limiting) - Control API usage

* [Access Control](/neurons/settings/access-control) - Manage Neuron access

# Sandbox

Source: https://docs.prompteus.com/features/sandbox

Test and interact with your Neurons using our open-source Sandbox application.

The Sandbox is a lightweight web application that allows you to quickly test and interact with your Neurons. Available at [sandbox.prompteus.com](https://sandbox.prompteus.com), it provides a simple interface for making API calls to any Neuron endpoint.

## Features

* **Interactive Testing**: Enter your Neuron path and input, then see the results in real-time

* **Authentication Support**: Securely provide API keys or JWT tokens for protected Neurons

* **Client-Side Processing**: All API calls are processed directly in your browser

* **Cache Control**: Easily bypass cache for testing with fresh responses

* **Open Source**: The entire application is [available on GitHub](https://github.com/prompteus-ai/sandbox)

## Using the Sandbox

1. **Neuron Path**: Enter your Neuron's path in the format `organization/neuron-slug`

2. **Authentication** (Optional): If your Neuron requires authentication, enter your API key or JWT token

3. **Input**: Type your input in the text area

4. **Cache Control**: Toggle the "Bypass Cache" option if you need fresh responses

5. **Run**: Click the "Run" button to execute the Neuron and see the results

The Sandbox respects all Neuron security settings. If a Neuron requires authentication, you'll need to provide valid credentials to access it.

## Contributing

The Sandbox is open source and available on [GitHub](https://github.com/prompteus-ai/sandbox). We welcome contributions from the community, whether it's bug fixes, feature improvements, or documentation updates.

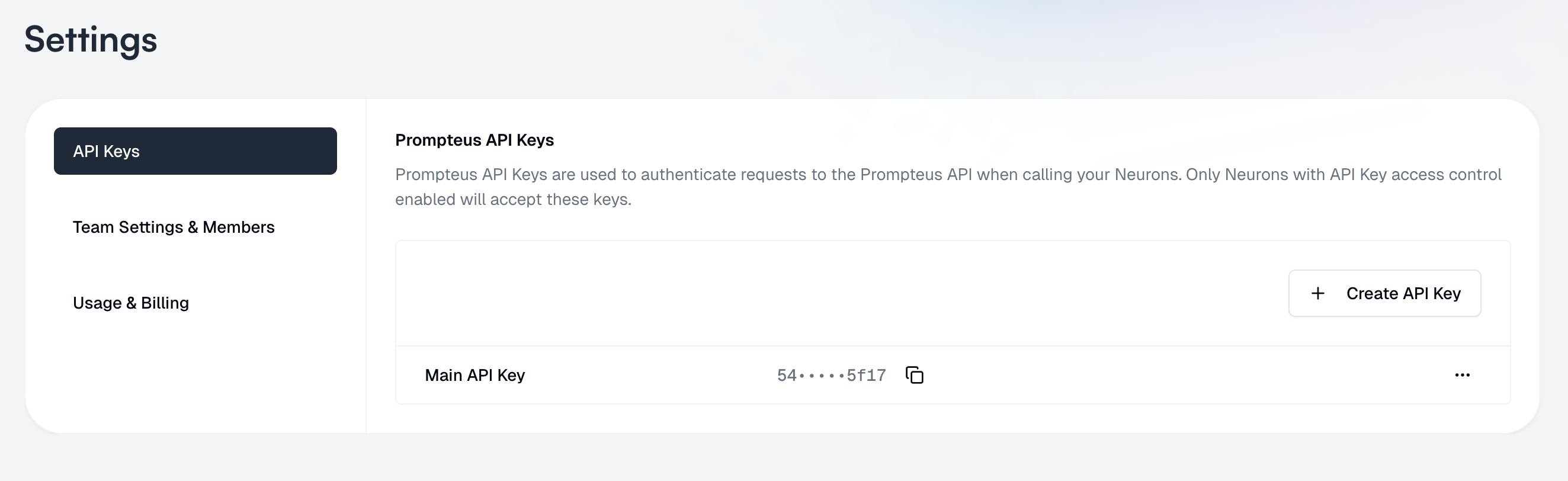

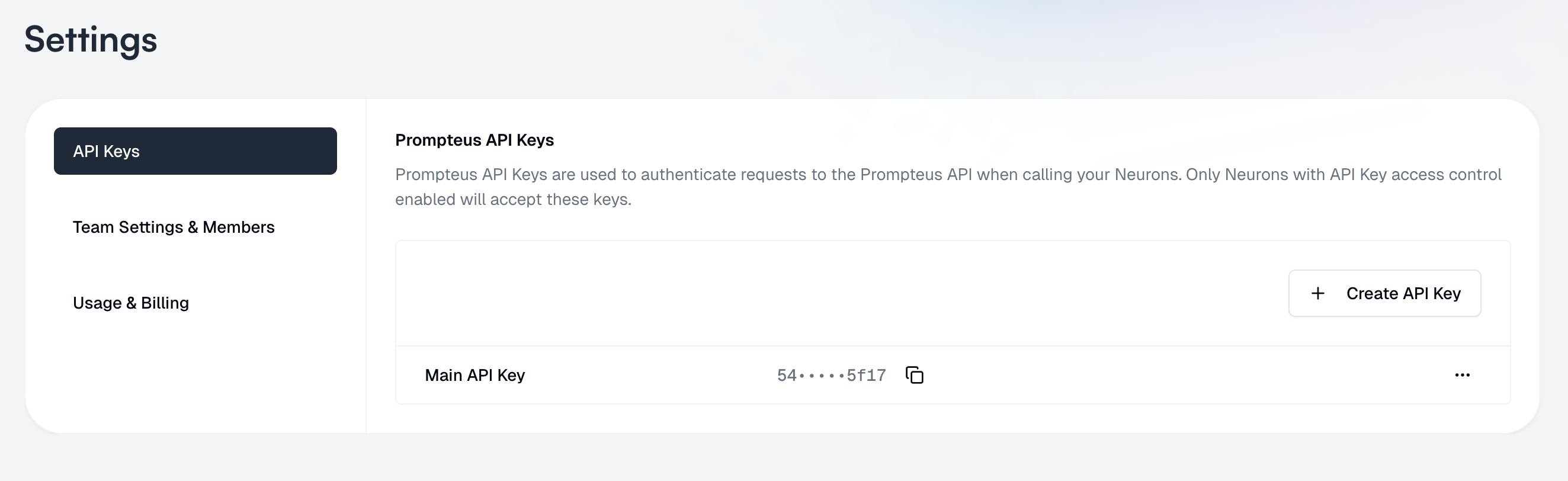

# Prompteus API Keys

Source: https://docs.prompteus.com/features/settings/api-keys

Learn how to manage your Prompteus API keys to authenticate requests to your Neurons.

Prompteus API Keys are used to authenticate requests to the Prompteus API when calling your Neurons. Only Neurons with API Key access control enabled will accept these keys.

## Managing API Keys

1. **Neuron Path**: Enter your Neuron's path in the format `organization/neuron-slug`

2. **Authentication** (Optional): If your Neuron requires authentication, enter your API key or JWT token

3. **Input**: Type your input in the text area

4. **Cache Control**: Toggle the "Bypass Cache" option if you need fresh responses

5. **Run**: Click the "Run" button to execute the Neuron and see the results

The Sandbox respects all Neuron security settings. If a Neuron requires authentication, you'll need to provide valid credentials to access it.

## Contributing

The Sandbox is open source and available on [GitHub](https://github.com/prompteus-ai/sandbox). We welcome contributions from the community, whether it's bug fixes, feature improvements, or documentation updates.

# Prompteus API Keys

Source: https://docs.prompteus.com/features/settings/api-keys

Learn how to manage your Prompteus API keys to authenticate requests to your Neurons.

Prompteus API Keys are used to authenticate requests to the Prompteus API when calling your Neurons. Only Neurons with API Key access control enabled will accept these keys.

## Managing API Keys

### Creating API Keys

1. Navigate to the Settings page

2. Select "[API Keys](https://dashboard.prompteus.com/settings/api-keys)" from the left sidebar

3. Click "Create API Key"

4. Give your key a friendly name for easy identification

You will be able to see the API key, even later. Be careful to not expose it to others, and revoke keys that are no longer needed or compromised.

### Using API Keys

To use an API key with your Neurons, you'll need to:

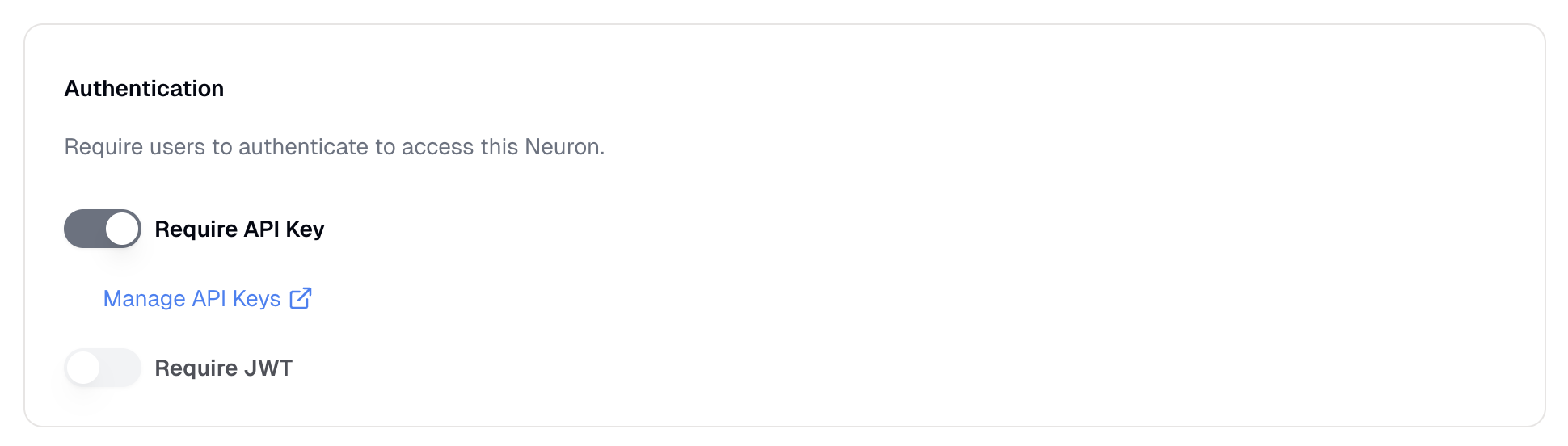

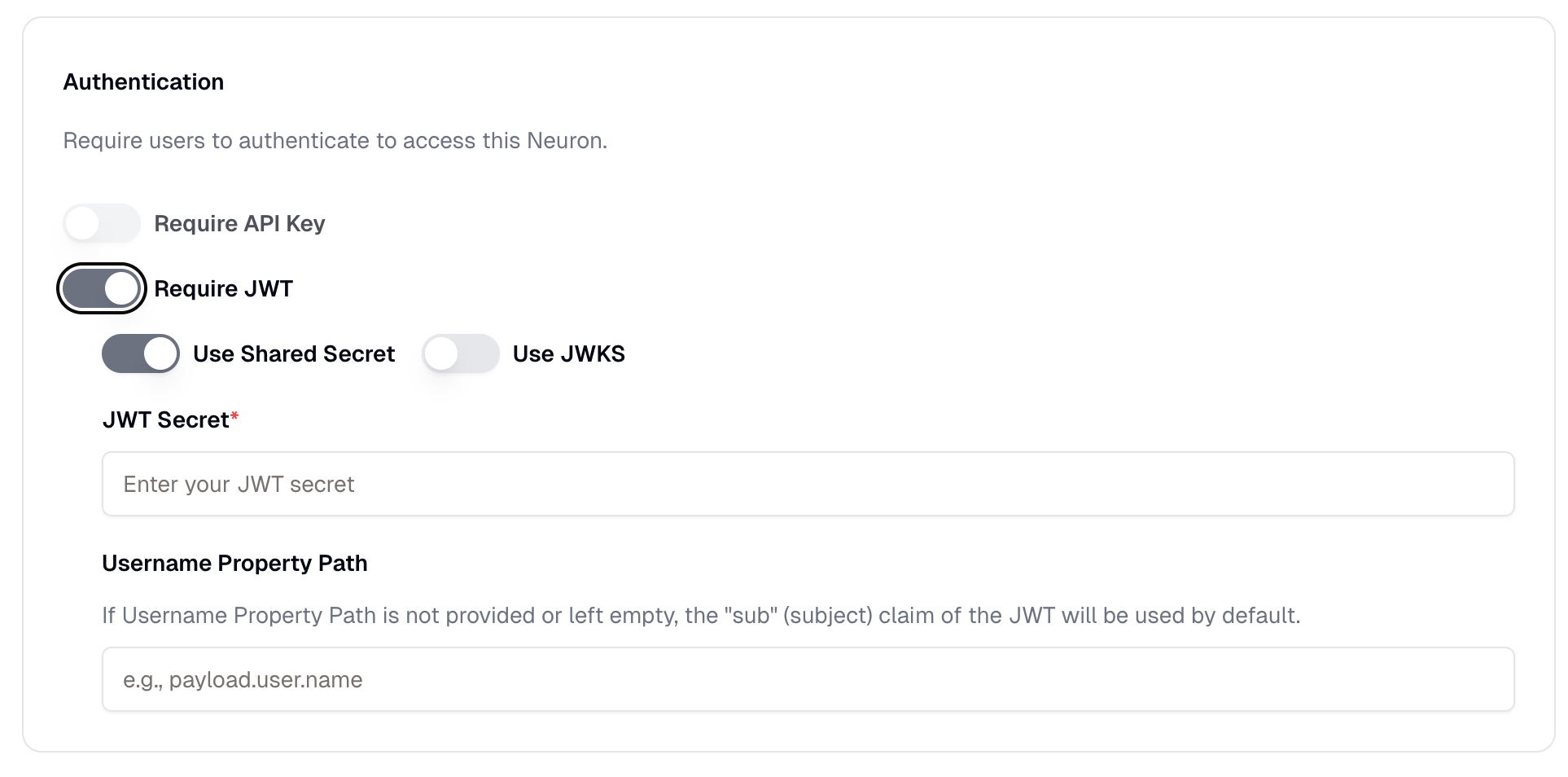

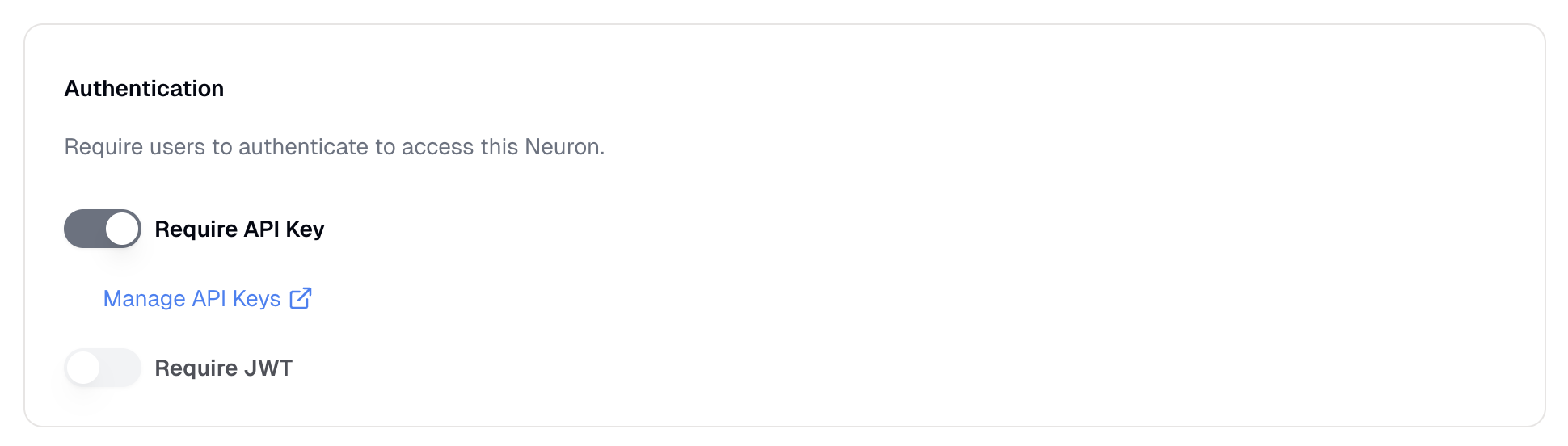

1. Enable API Key authentication in your [Neuron's access control settings](/neurons/settings/access-control#api-key-authentication)

2. Include the API key in your requests as described in the [authentication guide](/neurons/settings/access-control#using-api-keys)

### Best Practices

1. **Key Management**

* Use different keys for different environments (development, staging, production)

* Rotate keys periodically for security

* Delete unused keys

2. **Security**

* Never share API keys or commit them to version control

* Use environment variables to store keys in your applications

* Monitor key usage for unusual patterns

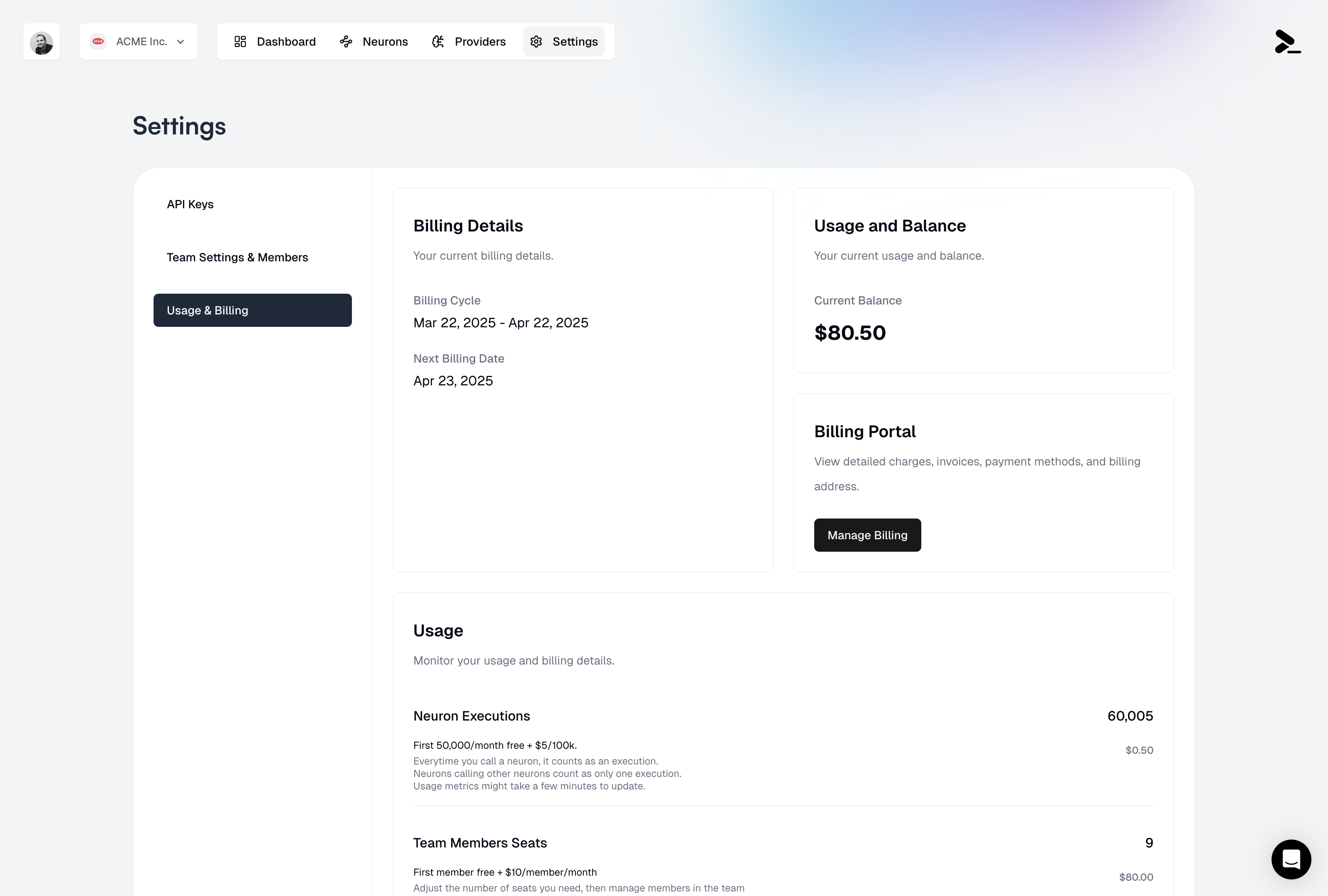

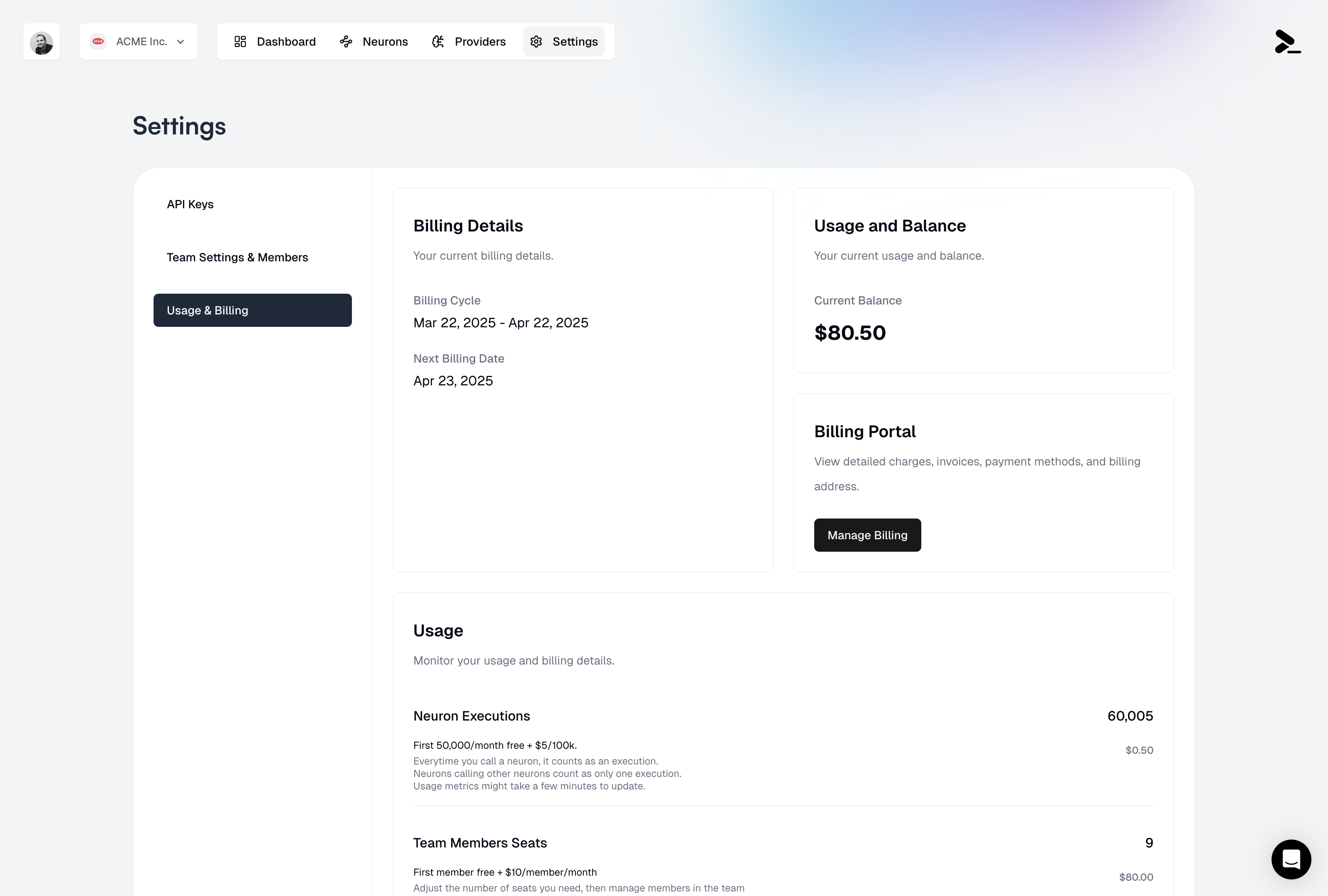

# Billing

Source: https://docs.prompteus.com/features/settings/billing

Understand Prompteus billing, manage your usage, and configure billing settings.

Prompteus uses a pay-as-you-go billing model with a generous free tier. This guide explains our billing system, pricing, and how to manage your billing settings.

## Billing Overview

### Creating API Keys

1. Navigate to the Settings page

2. Select "[API Keys](https://dashboard.prompteus.com/settings/api-keys)" from the left sidebar

3. Click "Create API Key"

4. Give your key a friendly name for easy identification

You will be able to see the API key, even later. Be careful to not expose it to others, and revoke keys that are no longer needed or compromised.

### Using API Keys

To use an API key with your Neurons, you'll need to:

1. Enable API Key authentication in your [Neuron's access control settings](/neurons/settings/access-control#api-key-authentication)

2. Include the API key in your requests as described in the [authentication guide](/neurons/settings/access-control#using-api-keys)

### Best Practices

1. **Key Management**

* Use different keys for different environments (development, staging, production)

* Rotate keys periodically for security

* Delete unused keys

2. **Security**

* Never share API keys or commit them to version control

* Use environment variables to store keys in your applications

* Monitor key usage for unusual patterns

# Billing

Source: https://docs.prompteus.com/features/settings/billing

Understand Prompteus billing, manage your usage, and configure billing settings.

Prompteus uses a pay-as-you-go billing model with a generous free tier. This guide explains our billing system, pricing, and how to manage your billing settings.

## Billing Overview

### Free Tier

The free tier provides essential features to get started with Prompteus, including:

* First 50,000 Neuron executions free per month

* First team member seat free

* Basic log retention (24 hours)

* No credit card required for free tier usage

If you stay within the free tier limits, you can use Prompteus without providing payment information. However, your account will be paused if you exceed these limits without a payment method on file.

### Pricing Structure

Our pricing is designed to scale with your needs, offering flexibility and transparency:

| Feature | Free Tier | Additional Cost | Notes |

| --------------------- | --------------- | ----------------------------------------------- | --------------------------------------------------------------------------------- |

| **Neuron Executions** | First 50k/month | \$5/100,000 additional executions | Counted per Neuron call, including internal calls |

| **Team Members** | First seat | \$10/member/month for additional seats | Billed based on active seats |

| **Log Retention** | 24h | Extended retention available at additional cost | You will need to wait at least 30 days before you can downgrade after an upgrade. |

## Usage and Balance

### Monitoring Usage

The usage dashboard provides comprehensive insights into your Prompteus account:

* Track current usage

* View billing cycle dates

* Monitor execution counts

* See team member seat usage

### Billing Portal

The billing portal is your central hub for managing all financial aspects of your Prompteus account:

* View detailed charges

* Download invoices

* Update payment methods

* Manage billing address

## Managing Settings

### Team Member Seats

Managing team member seats is straightforward through the billing interface:

1. Navigate to Usage & Billing

2. Find "Team Members Seats"

3. Click "Adjust Seats"

4. Set desired number of seats

Removing seats will not automatically remove team members, and vice versa. You'll need to manage team composition separately in [Team Settings](/features/settings/team).

### Log Retention

Configure your log retention settings to match your compliance and operational needs:

1. Find "Logs Retention Plan"

2. Click "Change Plan"

3. Select desired retention period

Changes to log retention are prorated immediately. You will need to wait at least 30 days before you can downgrade after an upgrade.

## Payment and Billing

### Credit Card Requirement

Understanding when a credit card is required helps prevent service interruptions:

* No credit card required if staying within free tier

* Credit card required when:

* Exceeding 50,000 executions/month

* Adding additional team members

* Extending log retention

If you exceed free tier limits without a payment method, your account will be paused and Neurons will fail until payment is configured.

### Billing Cycle

Our billing system operates on a monthly cycle with clear timing and requirements:

* Monthly billing cycle

* Usage resets at the start of each cycle

* Charges processed at cycle end

* Immediate payment required when exceeding free tier

# Team Settings

Source: https://docs.prompteus.com/features/settings/team

Manage your organization profile and team members in Prompteus.

[Team Settings](https://dashboard.prompteus.com/settings/team) allow you to manage your organization's profile and team members. This includes updating your organization's information, managing your team's composition, and controlling member access.

## Organization Profile

### Free Tier

The free tier provides essential features to get started with Prompteus, including:

* First 50,000 Neuron executions free per month

* First team member seat free

* Basic log retention (24 hours)

* No credit card required for free tier usage

If you stay within the free tier limits, you can use Prompteus without providing payment information. However, your account will be paused if you exceed these limits without a payment method on file.

### Pricing Structure

Our pricing is designed to scale with your needs, offering flexibility and transparency:

| Feature | Free Tier | Additional Cost | Notes |

| --------------------- | --------------- | ----------------------------------------------- | --------------------------------------------------------------------------------- |

| **Neuron Executions** | First 50k/month | \$5/100,000 additional executions | Counted per Neuron call, including internal calls |

| **Team Members** | First seat | \$10/member/month for additional seats | Billed based on active seats |

| **Log Retention** | 24h | Extended retention available at additional cost | You will need to wait at least 30 days before you can downgrade after an upgrade. |

## Usage and Balance

### Monitoring Usage

The usage dashboard provides comprehensive insights into your Prompteus account:

* Track current usage

* View billing cycle dates

* Monitor execution counts

* See team member seat usage

### Billing Portal

The billing portal is your central hub for managing all financial aspects of your Prompteus account:

* View detailed charges

* Download invoices

* Update payment methods

* Manage billing address

## Managing Settings

### Team Member Seats

Managing team member seats is straightforward through the billing interface:

1. Navigate to Usage & Billing

2. Find "Team Members Seats"

3. Click "Adjust Seats"

4. Set desired number of seats

Removing seats will not automatically remove team members, and vice versa. You'll need to manage team composition separately in [Team Settings](/features/settings/team).

### Log Retention

Configure your log retention settings to match your compliance and operational needs:

1. Find "Logs Retention Plan"

2. Click "Change Plan"

3. Select desired retention period

Changes to log retention are prorated immediately. You will need to wait at least 30 days before you can downgrade after an upgrade.

## Payment and Billing

### Credit Card Requirement

Understanding when a credit card is required helps prevent service interruptions:

* No credit card required if staying within free tier

* Credit card required when:

* Exceeding 50,000 executions/month

* Adding additional team members

* Extending log retention

If you exceed free tier limits without a payment method, your account will be paused and Neurons will fail until payment is configured.

### Billing Cycle

Our billing system operates on a monthly cycle with clear timing and requirements:

* Monthly billing cycle

* Usage resets at the start of each cycle

* Charges processed at cycle end

* Immediate payment required when exceeding free tier

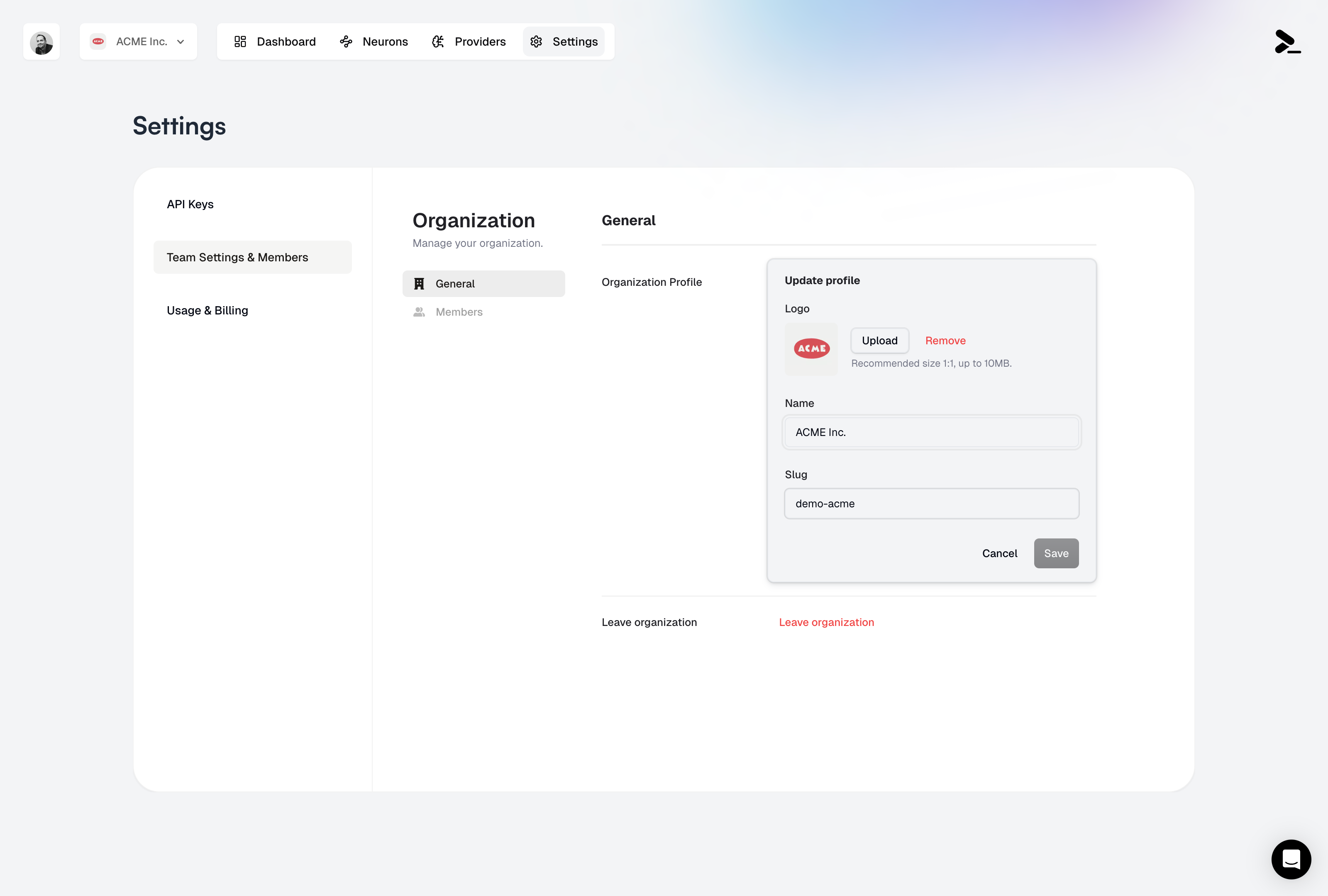

# Team Settings

Source: https://docs.prompteus.com/features/settings/team

Manage your organization profile and team members in Prompteus.

[Team Settings](https://dashboard.prompteus.com/settings/team) allow you to manage your organization's profile and team members. This includes updating your organization's information, managing your team's composition, and controlling member access.

## Organization Profile

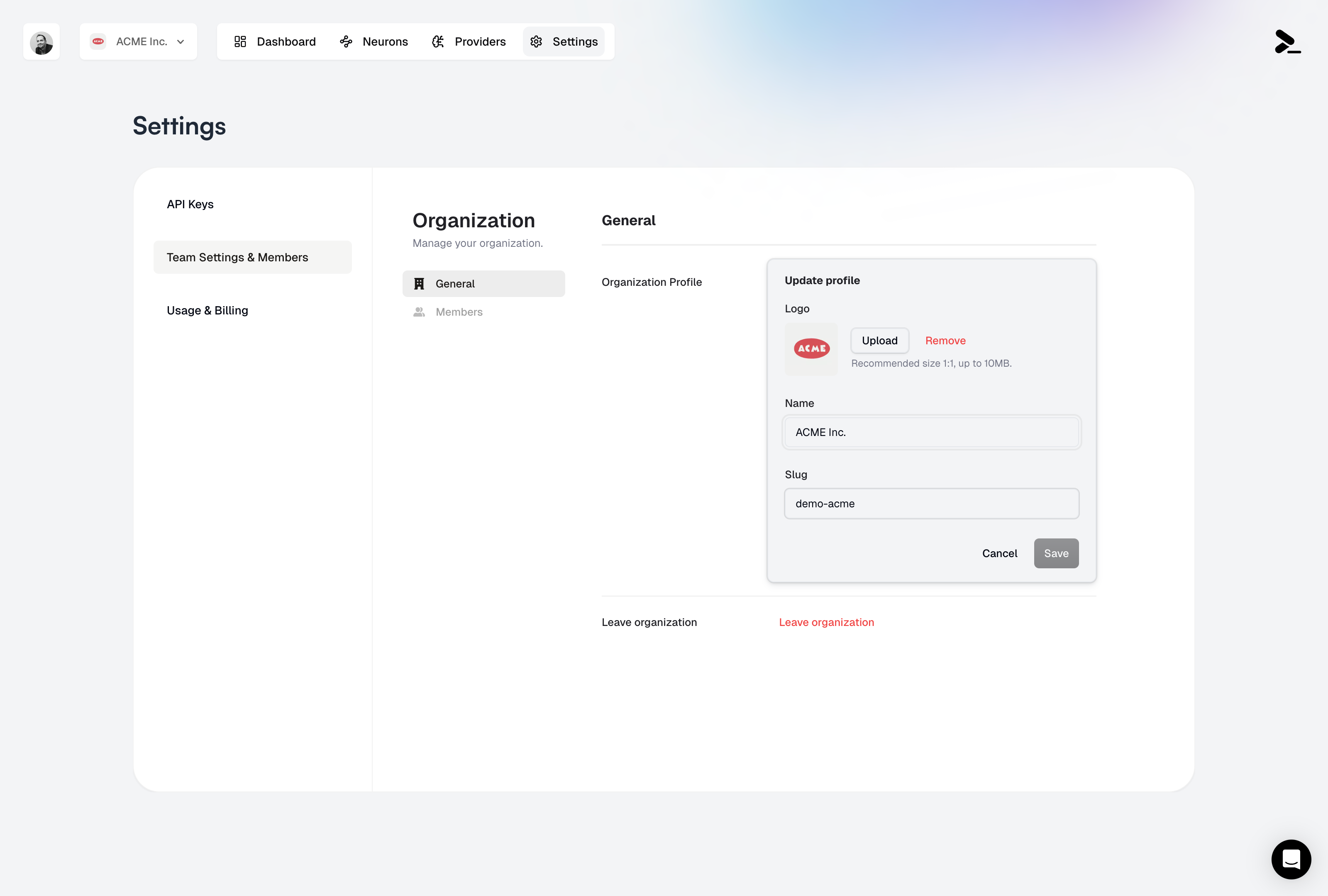

### Managing Organization Details

Your organization's profile contains essential information that identifies your team and is used throughout Prompteus. Here's what you can configure:

* **Organization Name**: This is your team's display name, visible to all team members and used in the dashboard

* **Organization Slug**: A unique identifier used in API URLs and system references. This is a critical setting as it's used in all your Neuron API endpoints

* **Logo**: Upload your organization's logo to personalize your dashboard (recommended size 1:1, up to 10MB)

The organization slug is used in all your Neuron API endpoints. Changing it will update all your API URLs, so make sure to update your applications accordingly.

## Team Management

The team management interface provides a comprehensive view of your organization's members. Here you can:

* View all team members and their current status

* See when each member joined the organization

* Manage member roles and permissions

* Remove members when they no longer need access

* Search and filter members to quickly find specific team members

### Inviting Members

Adding new team members is a simple process:

1. Click the "Invite" button in the team management interface

2. Enter the member's email address

3. Select their role from the available options

4. Send the invitation

Team member seats are billed monthly. The first member is free, and additional members are \$10/member/month. You can adjust the number of seats in the [billing settings](/features/settings/billing).

### Member Roles

Prompteus provides two distinct roles to manage team member permissions:

* **Admin**: Has full access to all organization settings and Neurons, including the ability to invite new members and modify organization details

* **Member**: Can create and manage Neurons, but cannot modify organization settings or invite new members

We're working on adding more roles in the future to provide finer-grained access control.

## Best Practices

### Organization Management

Maintaining a well-organized team structure is essential for efficient operation:

* Keep your organization slug consistent to avoid breaking API integrations

* Use a recognizable logo to help team members quickly identify your organization

* Document any slug changes to ensure all team members are aware of updates

### Team Management

Effective team management helps maintain security and efficiency:

* Regularly review team member access to ensure only active members have access

* Remove inactive members to maintain accurate billing and security

* Maintain clear role assignments to prevent confusion about permissions

## Related Resources

* [Billing Settings](/features/settings/billing) - Manage team member seats and billing

* [API Keys](/features/settings/api-keys) - Manage authentication for your Neurons

# Calling Neurons

Source: https://docs.prompteus.com/neurons/api

Learn how to call Neurons using the REST API or the TypeScript SDK.

Neurons can be called using either the REST API directly or our official TypeScript SDK. This guide explains both methods and their options.

## REST API

Each Neuron has a unique API URL in the format:

```bash

https://run.prompteus.com//

```

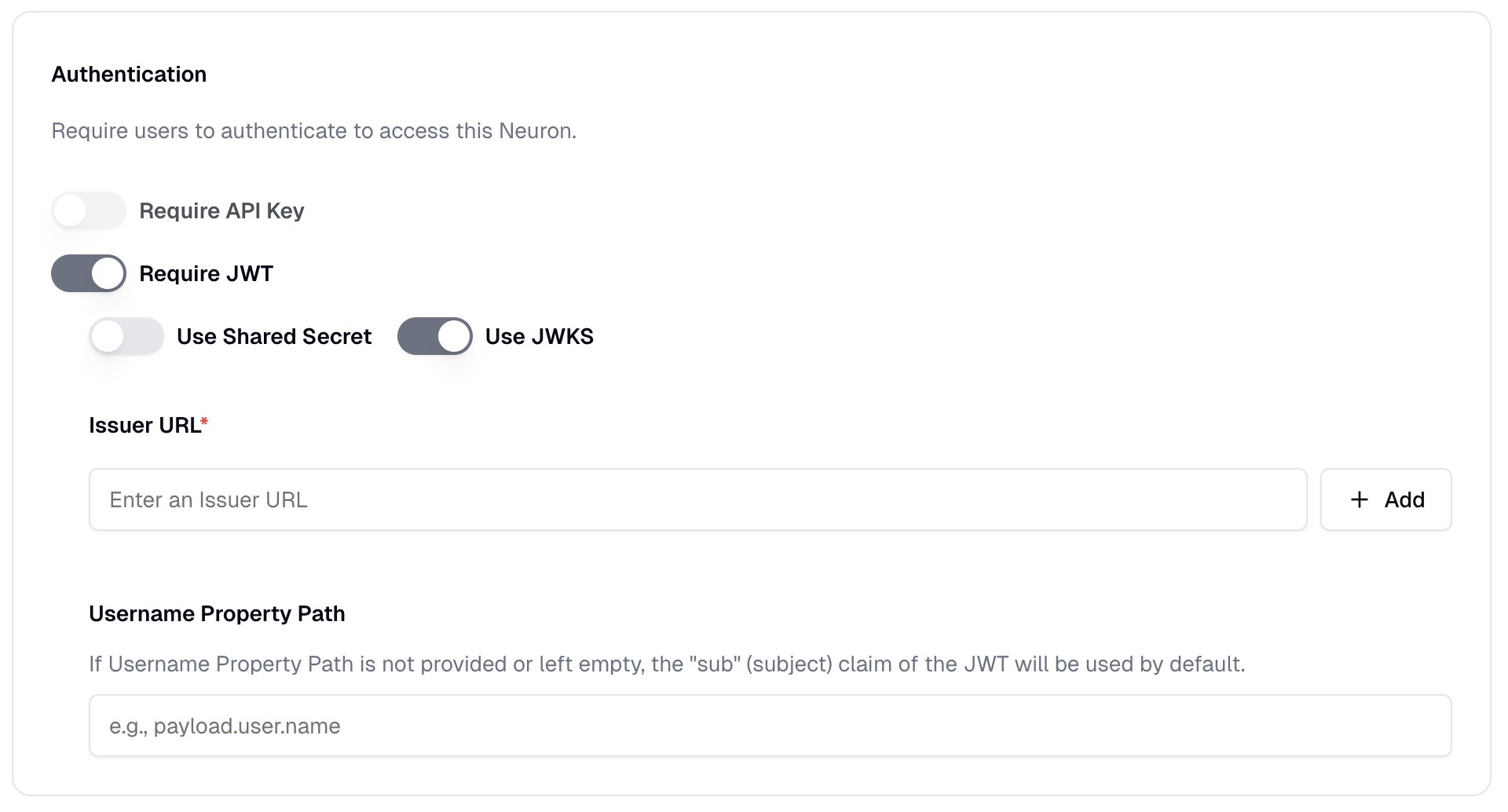

### Authentication Requirements

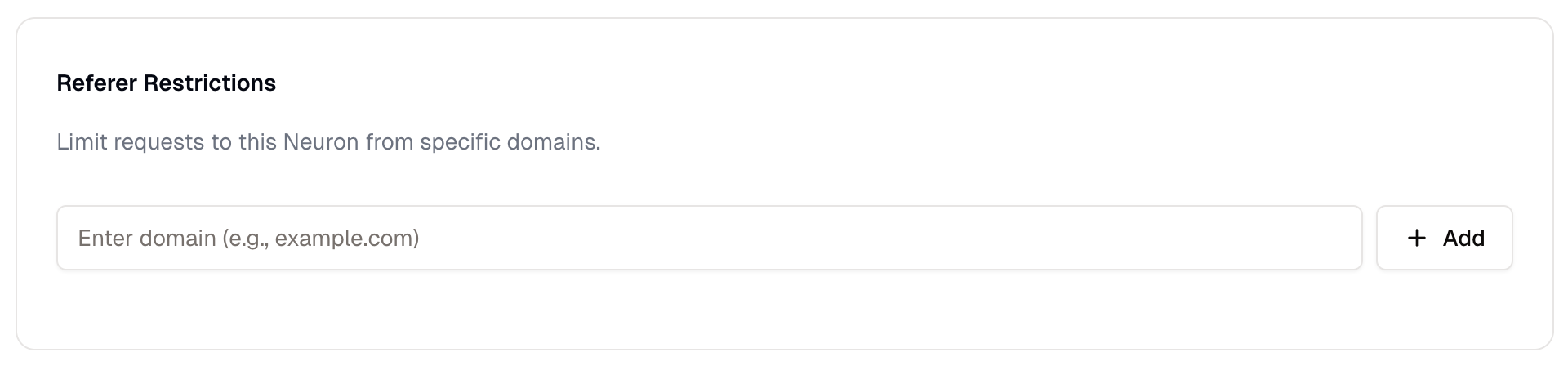

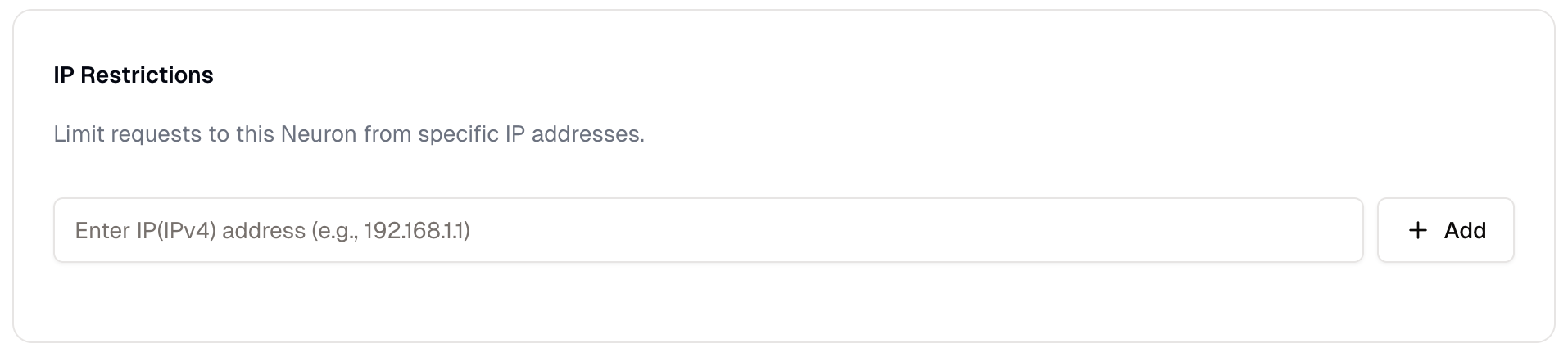

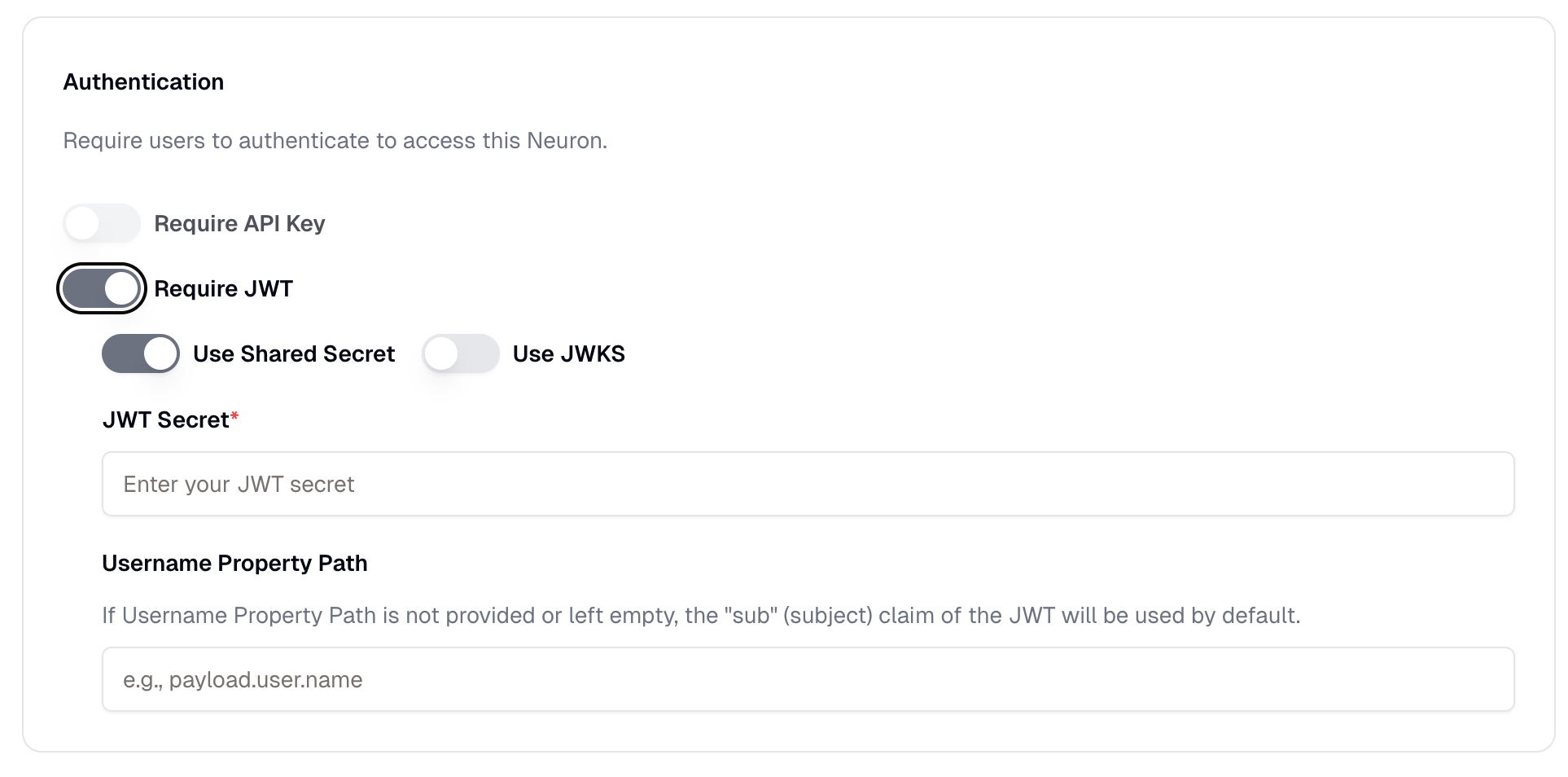

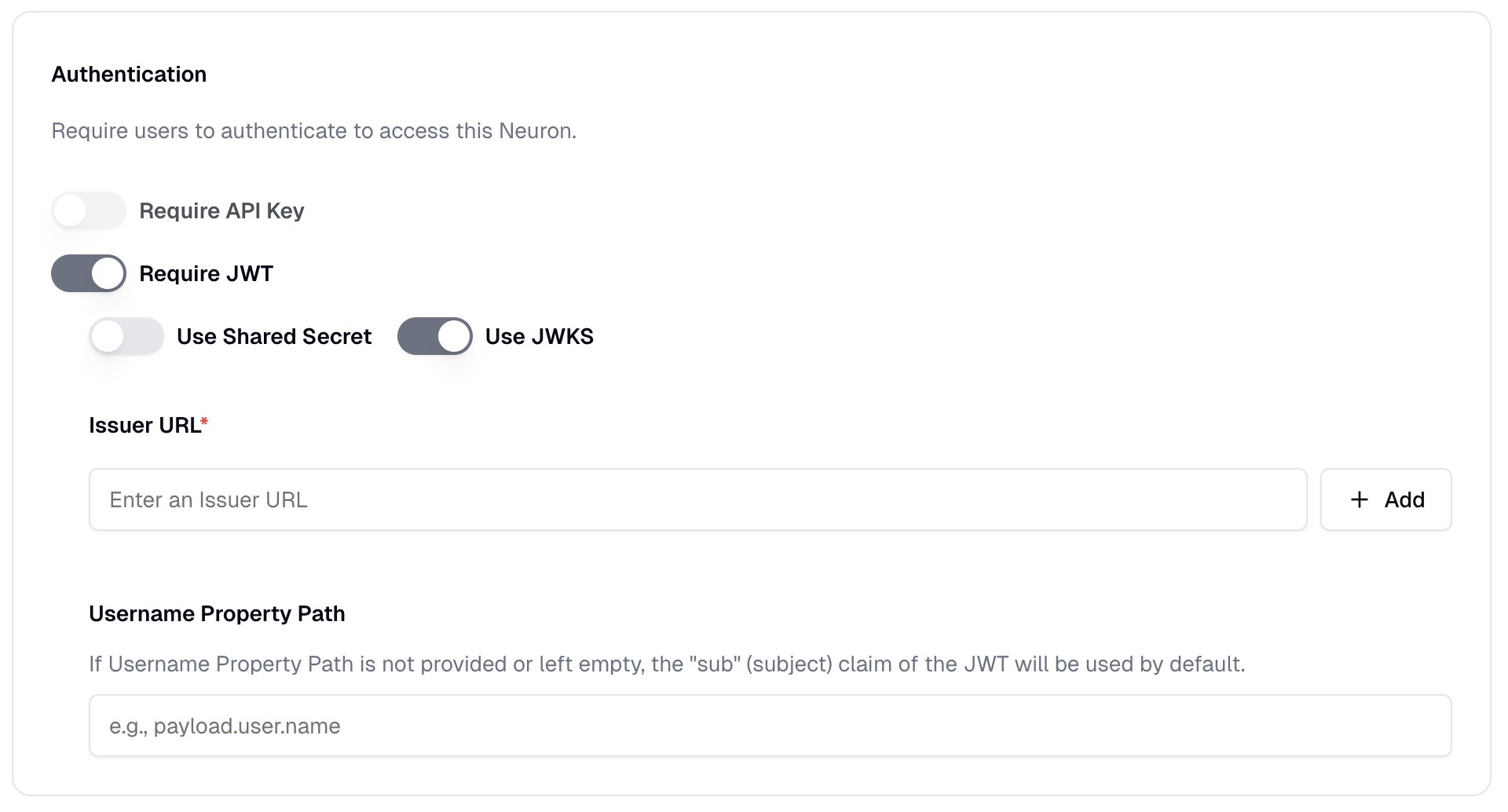

Authentication is only required if enforced by the Neuron's [access control settings](/neurons/settings/access-control). A Neuron can be configured with:

* **[Public Access](/neurons/settings/access-control#public-access)**: No authentication required

* **[Referer Restrictions](/neurons/settings/access-control#referer-restrictions)**: Only requests from specific domains are allowed

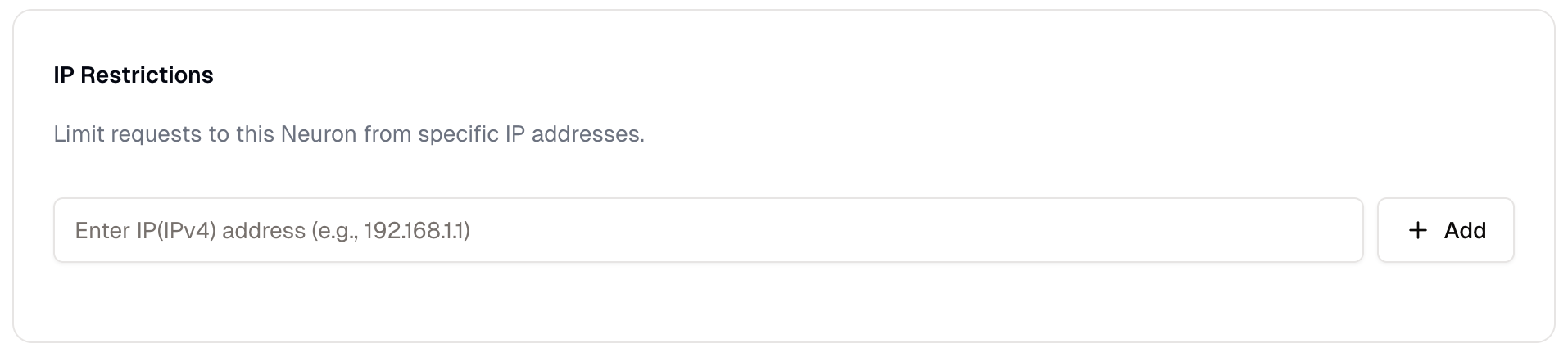

* **[IP Restrictions](/neurons/settings/access-control#ip-restrictions)**: Only requests from specific IP addresses are allowed

* **[API Key Authentication](/neurons/settings/access-control#api-key-authentication)**: Requires a Prompteus API key

* **[JWT Authentication](/neurons/settings/access-control#jwt-authentication)**: Requires a valid JWT token

When authentication is required, provide it using the `Authorization` header:

```bash

Authorization: Bearer

```

If your Neuron has public access enabled, you can skip the authentication step entirely. See the [Access Control documentation](/neurons/settings/access-control) for detailed configuration options.

### Basic Usage

To call a Neuron, send a POST request to its API URL with your input in JSON format:

```bash curl

curl -X POST https://run.prompteus.com// \

-H "Content-Type: application/json" \

-d '{"input": "What is the meaning of life?"}'

```

```javascript Fetch (JavaScript)

const response = await fetch("https://run.prompteus.com//", {

method: "POST",

body: JSON.stringify({ input: "What is the meaning of life?" }),

});

```

```python Python

import requests

response = requests.post(

"https://run.prompteus.com//",

json={"input": "What is the meaning of life?"}

)

print(response.json())

```

### Complete Examples

Here are complete examples showing how to call Neurons with error handling and authentication:

```javascript JavaScript

async function callNeuron(orgSlug, neuronSlug, input, apiKey = null) {

const url = `https://run.prompteus.com/${orgSlug}/${neuronSlug}`;

const headers = {

'Content-Type': 'application/json'

};

// Add authentication if provided

if (apiKey) {

headers['Authorization'] = `Bearer ${apiKey}`;

}

try {

const response = await fetch(url, {

method: 'POST',

headers,

body: JSON.stringify({ input }),

});

if (!response.ok) {

const error = await response.json();

throw new Error(`API Error: ${error.error} (${response.status})`);

}

const data = await response.json();

// Log if response was from cache

if (data.fromCache) {

console.log('Response served from cache');

}

return data;

} catch (error) {

console.error('Failed to call neuron:', error);

throw error;

}

}

// Example usage

async function example() {

try {

// Call without authentication

const publicResponse = await callNeuron(

'my-org',

'my-neuron',

'What is the meaning of life?'

);

console.log('Public response:', publicResponse);

// Call with authentication

const authenticatedResponse = await callNeuron(

'my-org',

'my-neuron',

'What is the meaning of life?',

'your-api-key'

);

console.log('Authenticated response:', authenticatedResponse);

// Call with cache bypass

const url = new URL('https://run.prompteus.com/my-org/my-neuron');

url.searchParams.append('bypassCache', 'true');

const noCacheResponse = await fetch(url, {

method: 'POST',

headers: { 'Content-Type': 'application/json' },

body: JSON.stringify({ input: 'Fresh response please' })

});

console.log('No cache response:', await noCacheResponse.json());

} catch (error) {

console.error('Example failed:', error);

}

}

```

```python Python

import requests

from typing import Optional, Dict, Any

from urllib.parse import urljoin

class NeuronClient:

def __init__(self, base_url: str = "https://run.prompteus.com"):

self.base_url = base_url

self.api_key: Optional[str] = None

def set_api_key(self, api_key: str) -> None:

"""Set the API key for authentication."""

self.api_key = api_key

def call_neuron(

self,

org_slug: str,

neuron_slug: str,

input_text: str,

bypass_cache: bool = False,

raw_output: bool = False

) -> Dict[str, Any]:

"""

Call a Neuron with the given parameters.

Args:

org_slug: Organization slug

neuron_slug: Neuron slug

input_text: Input text for the neuron

bypass_cache: Whether to bypass the cache

raw_output: Whether to return raw output

Returns:

Dict containing the neuron response

Raises:

requests.exceptions.RequestException: If the API call fails

"""

# Build URL with query parameters

url = urljoin(self.base_url, f"{org_slug}/{neuron_slug}")

params = {}

if bypass_cache:

params['bypassCache'] = 'true'

if raw_output:

params['rawOutput'] = 'true'

# Prepare headers

headers = {'Content-Type': 'application/json'}

if self.api_key:

headers['Authorization'] = f'Bearer {self.api_key}'

try:

response = requests.post(

url,

json={'input': input_text},

headers=headers,

params=params

)

response.raise_for_status()

data = response.json()

// Log if response was from cache

if data.get('fromCache'):

print('Response served from cache')

return data

except requests.exceptions.RequestException as e:

if hasattr(e.response, 'json'):

error_data = e.response.json()

print(f"API Error: {error_data.get('error')} ({e.response.status_code})")

raise

# Example usage

def example():

client = NeuronClient()

try:

// Call without authentication

public_response = client.call_neuron(

'my-org',

'my-neuron',

'What is the meaning of life?'

)

print('Public response:', public_response)

// Call with authentication

client.set_api_key('your-api-key')

auth_response = client.call_neuron(

'my-org',

'my-neuron',

'What is the meaning of life?'

)

print('Authenticated response:', auth_response)

// Call with cache bypass

no_cache_response = client.call_neuron(

'my-org',

'my-neuron',

'Fresh response please',

bypass_cache=True

)

print('No cache response:', no_cache_response)

except requests.exceptions.RequestException as e:

print('Example failed:', e)

```

### Query Parameters

The API supports the following query parameters:

| Parameter | Type | Description |

| ------------- | ------- | ------------------------------------------------------------------------------ |

| `bypassCache` | boolean | When true, forces a new execution and bypasses both exact and semantic caching |

| `rawOutput` | boolean | When true, returns the raw text output without any processing |

Example with query parameters:

```bash

https://run.prompteus.com//?bypassCache=true&rawOutput=true

```

### Authentication

If your Neuron requires authentication, you can provide it using the `Authorization` header:

```bash

Authorization: Bearer

```

See [Access Control](/neurons/settings/access-control) for more details about authentication methods.

### Response Format

A successful response will have this structure:

```typescript

{

output?: string; // The generated output

fromCache?: boolean; // Whether the response was served from cache

executionStopped?: boolean; // Whether execution was stopped prematurely

}

```

### Error Handling

Errors are returned with appropriate HTTP status codes and a JSON response:

```typescript

{

error: string; // Error message

statusCode: number; // HTTP status code

}

```

The API uses standard HTTP status codes to indicate the success or failure of requests:

| Status Code | Description | Common Causes |

| --------------------------- | -------------------------- | ------------------------------------------------------------------------------------------------------------------------------------- |

| `400` Bad Request | Invalid request parameters | • Malformed input JSON

### Managing Organization Details

Your organization's profile contains essential information that identifies your team and is used throughout Prompteus. Here's what you can configure:

* **Organization Name**: This is your team's display name, visible to all team members and used in the dashboard

* **Organization Slug**: A unique identifier used in API URLs and system references. This is a critical setting as it's used in all your Neuron API endpoints

* **Logo**: Upload your organization's logo to personalize your dashboard (recommended size 1:1, up to 10MB)

The organization slug is used in all your Neuron API endpoints. Changing it will update all your API URLs, so make sure to update your applications accordingly.

## Team Management

The team management interface provides a comprehensive view of your organization's members. Here you can:

* View all team members and their current status

* See when each member joined the organization

* Manage member roles and permissions

* Remove members when they no longer need access

* Search and filter members to quickly find specific team members

### Inviting Members

Adding new team members is a simple process:

1. Click the "Invite" button in the team management interface

2. Enter the member's email address

3. Select their role from the available options

4. Send the invitation

Team member seats are billed monthly. The first member is free, and additional members are \$10/member/month. You can adjust the number of seats in the [billing settings](/features/settings/billing).

### Member Roles

Prompteus provides two distinct roles to manage team member permissions:

* **Admin**: Has full access to all organization settings and Neurons, including the ability to invite new members and modify organization details

* **Member**: Can create and manage Neurons, but cannot modify organization settings or invite new members

We're working on adding more roles in the future to provide finer-grained access control.

## Best Practices

### Organization Management

Maintaining a well-organized team structure is essential for efficient operation:

* Keep your organization slug consistent to avoid breaking API integrations

* Use a recognizable logo to help team members quickly identify your organization

* Document any slug changes to ensure all team members are aware of updates

### Team Management

Effective team management helps maintain security and efficiency:

* Regularly review team member access to ensure only active members have access

* Remove inactive members to maintain accurate billing and security

* Maintain clear role assignments to prevent confusion about permissions

## Related Resources

* [Billing Settings](/features/settings/billing) - Manage team member seats and billing

* [API Keys](/features/settings/api-keys) - Manage authentication for your Neurons

# Calling Neurons

Source: https://docs.prompteus.com/neurons/api

Learn how to call Neurons using the REST API or the TypeScript SDK.

Neurons can be called using either the REST API directly or our official TypeScript SDK. This guide explains both methods and their options.

## REST API

Each Neuron has a unique API URL in the format:

```bash

https://run.prompteus.com//

```

### Authentication Requirements

Authentication is only required if enforced by the Neuron's [access control settings](/neurons/settings/access-control). A Neuron can be configured with:

* **[Public Access](/neurons/settings/access-control#public-access)**: No authentication required

* **[Referer Restrictions](/neurons/settings/access-control#referer-restrictions)**: Only requests from specific domains are allowed

* **[IP Restrictions](/neurons/settings/access-control#ip-restrictions)**: Only requests from specific IP addresses are allowed

* **[API Key Authentication](/neurons/settings/access-control#api-key-authentication)**: Requires a Prompteus API key

* **[JWT Authentication](/neurons/settings/access-control#jwt-authentication)**: Requires a valid JWT token

When authentication is required, provide it using the `Authorization` header:

```bash

Authorization: Bearer

```

If your Neuron has public access enabled, you can skip the authentication step entirely. See the [Access Control documentation](/neurons/settings/access-control) for detailed configuration options.

### Basic Usage

To call a Neuron, send a POST request to its API URL with your input in JSON format:

```bash curl

curl -X POST https://run.prompteus.com// \

-H "Content-Type: application/json" \

-d '{"input": "What is the meaning of life?"}'

```

```javascript Fetch (JavaScript)

const response = await fetch("https://run.prompteus.com//", {

method: "POST",

body: JSON.stringify({ input: "What is the meaning of life?" }),

});

```

```python Python

import requests

response = requests.post(

"https://run.prompteus.com//",

json={"input": "What is the meaning of life?"}

)

print(response.json())

```

### Complete Examples

Here are complete examples showing how to call Neurons with error handling and authentication:

```javascript JavaScript

async function callNeuron(orgSlug, neuronSlug, input, apiKey = null) {

const url = `https://run.prompteus.com/${orgSlug}/${neuronSlug}`;

const headers = {

'Content-Type': 'application/json'

};

// Add authentication if provided

if (apiKey) {

headers['Authorization'] = `Bearer ${apiKey}`;

}

try {

const response = await fetch(url, {

method: 'POST',

headers,

body: JSON.stringify({ input }),

});

if (!response.ok) {

const error = await response.json();

throw new Error(`API Error: ${error.error} (${response.status})`);

}

const data = await response.json();

// Log if response was from cache

if (data.fromCache) {

console.log('Response served from cache');

}

return data;

} catch (error) {

console.error('Failed to call neuron:', error);

throw error;

}

}

// Example usage

async function example() {

try {

// Call without authentication

const publicResponse = await callNeuron(

'my-org',

'my-neuron',

'What is the meaning of life?'

);

console.log('Public response:', publicResponse);

// Call with authentication

const authenticatedResponse = await callNeuron(

'my-org',

'my-neuron',

'What is the meaning of life?',

'your-api-key'

);

console.log('Authenticated response:', authenticatedResponse);

// Call with cache bypass

const url = new URL('https://run.prompteus.com/my-org/my-neuron');

url.searchParams.append('bypassCache', 'true');

const noCacheResponse = await fetch(url, {

method: 'POST',

headers: { 'Content-Type': 'application/json' },

body: JSON.stringify({ input: 'Fresh response please' })

});

console.log('No cache response:', await noCacheResponse.json());

} catch (error) {

console.error('Example failed:', error);

}

}

```

```python Python

import requests

from typing import Optional, Dict, Any

from urllib.parse import urljoin

class NeuronClient:

def __init__(self, base_url: str = "https://run.prompteus.com"):

self.base_url = base_url

self.api_key: Optional[str] = None

def set_api_key(self, api_key: str) -> None:

"""Set the API key for authentication."""

self.api_key = api_key

def call_neuron(

self,

org_slug: str,

neuron_slug: str,

input_text: str,

bypass_cache: bool = False,

raw_output: bool = False

) -> Dict[str, Any]:

"""

Call a Neuron with the given parameters.

Args:

org_slug: Organization slug

neuron_slug: Neuron slug

input_text: Input text for the neuron

bypass_cache: Whether to bypass the cache

raw_output: Whether to return raw output

Returns:

Dict containing the neuron response

Raises:

requests.exceptions.RequestException: If the API call fails

"""

# Build URL with query parameters

url = urljoin(self.base_url, f"{org_slug}/{neuron_slug}")

params = {}

if bypass_cache:

params['bypassCache'] = 'true'

if raw_output:

params['rawOutput'] = 'true'

# Prepare headers

headers = {'Content-Type': 'application/json'}

if self.api_key:

headers['Authorization'] = f'Bearer {self.api_key}'

try:

response = requests.post(

url,

json={'input': input_text},

headers=headers,

params=params

)

response.raise_for_status()

data = response.json()

// Log if response was from cache

if data.get('fromCache'):

print('Response served from cache')

return data

except requests.exceptions.RequestException as e:

if hasattr(e.response, 'json'):

error_data = e.response.json()

print(f"API Error: {error_data.get('error')} ({e.response.status_code})")

raise

# Example usage

def example():

client = NeuronClient()

try:

// Call without authentication

public_response = client.call_neuron(

'my-org',

'my-neuron',

'What is the meaning of life?'

)

print('Public response:', public_response)

// Call with authentication

client.set_api_key('your-api-key')

auth_response = client.call_neuron(

'my-org',

'my-neuron',

'What is the meaning of life?'

)

print('Authenticated response:', auth_response)

// Call with cache bypass

no_cache_response = client.call_neuron(

'my-org',

'my-neuron',

'Fresh response please',

bypass_cache=True

)

print('No cache response:', no_cache_response)

except requests.exceptions.RequestException as e:

print('Example failed:', e)

```

### Query Parameters

The API supports the following query parameters:

| Parameter | Type | Description |

| ------------- | ------- | ------------------------------------------------------------------------------ |

| `bypassCache` | boolean | When true, forces a new execution and bypasses both exact and semantic caching |

| `rawOutput` | boolean | When true, returns the raw text output without any processing |

Example with query parameters:

```bash

https://run.prompteus.com//?bypassCache=true&rawOutput=true

```

### Authentication

If your Neuron requires authentication, you can provide it using the `Authorization` header:

```bash

Authorization: Bearer

```

See [Access Control](/neurons/settings/access-control) for more details about authentication methods.

### Response Format

A successful response will have this structure:

```typescript

{

output?: string; // The generated output

fromCache?: boolean; // Whether the response was served from cache

executionStopped?: boolean; // Whether execution was stopped prematurely

}

```

### Error Handling

Errors are returned with appropriate HTTP status codes and a JSON response:

```typescript

{

error: string; // Error message

statusCode: number; // HTTP status code

}

```

The API uses standard HTTP status codes to indicate the success or failure of requests:

| Status Code | Description | Common Causes |

| --------------------------- | -------------------------- | ------------------------------------------------------------------------------------------------------------------------------------- |

| `400` Bad Request | Invalid request parameters | • Malformed input JSON

• Missing required fields |

| `401` Unauthorized | Authentication failed | • Invalid/expired token

• Malformed API key

• Missing authentication |

| `403` Forbidden | Access denied | • [Access control](/neurons/settings/access-control) restrictions

• IP not in allowed list

• Domain not in allowed referers |

| `404` Not Found | Resource not found | • Neuron not found

• Deployment not found

• Organization not found

• Invalid neuron configuration |

| `422` Unprocessable Entity | Validation failed | • Input validation failed

• Invalid parameter format |

| `429` Too Many Requests | Rate limit exceeded | • [Rate limits](/neurons/settings/rate-limiting) exceeded

• Account paused (billing)

• Usage limits exceeded |

| `500` Internal Server Error | Server-side error | • Unexpected server errors

• Missing deployment

• Configuration issues |

| `503` Service Unavailable | Service down | • Maintenance mode

• System overload

• Upstream service failures |

For `429` responses, check the `Retry-After` header for the number of seconds to wait before retrying:

```bash

Retry-After: 120 # Seconds until the block expires

```

### Error Handling Examples

Here's how to handle errors in different languages:

```javascript JavaScript

try {

const response = await fetch(url, {

method: 'POST',

headers: { 'Content-Type': 'application/json' },

body: JSON.stringify({ input: 'Hello' })

});

if (!response.ok) {

const error = await response.json();

if (response.status === 429) {

const retryAfter = response.headers.get('Retry-After');

console.log(`Rate limited. Retry after ${retryAfter} seconds`);

}

throw new Error(`API Error: ${error.error} (${response.status})`);

}

const data = await response.json();

// Process successful response...

} catch (error) {

console.error('Request failed:', error);

}

```

```python Python

try:

response = requests.post(url,

json={'input': 'Hello'},

headers={'Content-Type': 'application/json'}

)

response.raise_for_status()

data = response.json()

// Process successful response...

except requests.exceptions.HTTPError as e:

if e.response.status_code == 429:

retry_after = e.response.headers.get('Retry-After')

print(f"Rate limited. Retry after {retry_after} seconds")

error_data = e.response.json()

print(f"API Error: {error_data.get('error')} ({e.response.status_code})")

except requests.exceptions.RequestException as e:

print(f"Request failed: {e}")

```

```typescript TypeScript SDK

try {

const response = await client.callNeuron('org', 'neuron', {

input: 'Hello'

});

// Process successful response...

} catch (error) {

if ('statusCode' in error) {

// Handle specific error types

switch (error.statusCode) {

case 429:

console.error('Rate limited:', error.error);

// Implement retry logic

break;

case 401:

console.error('Authentication failed:', error.error);

// Refresh authentication

break;

default:

console.error(`API Error ${error.statusCode}:`, error.error);

}

} else {

console.error('Unexpected error:', error);

}

}

```

For production applications, we recommend implementing proper retry logic with exponential backoff, especially for `429` and `503` errors. See [Rate Limiting](/neurons/settings/rate-limiting) for more details about handling rate limits.

## TypeScript SDK

We provide an official TypeScript SDK for a more convenient integration experience.

### Installation

```bash

npm install @prompteus-ai/neuron-runner

```

### Authentication

The SDK supports both authenticated and unauthenticated calls, depending on your [Neuron's access control settings](/neurons/settings/access-control). Authentication is only required if your Neuron is configured to use API Key or JWT authentication.

If authentication is needed, you can provide it in multiple ways:

1. During client creation:

```typescript

const client = new Prompteus({ jwtOrApiKey: 'your-token' });

```

2. Using the setter method:

```typescript

client.setJwtOrApiKey('your-token');

```

3. Per request:

```typescript

await client.callNeuron('org', 'neuron', {

input: 'Hello',

jwtOrApiKey: 'your-token'

});

```

If your Neuron has [public access](/neurons/settings/access-control#public-access) enabled, you can omit the authentication token. The SDK will work without authentication for public Neurons.

### Basic Usage

```typescript

import { Prompteus } from '@prompteus-ai/neuron-runner';

// Create a client instance

const client = new Prompteus({

jwtOrApiKey: 'your-jwt-token', // Optional

baseURL: 'https://run.prompteus.com' // Optional, defaults to this value

});

// Call a neuron

try {

const response = await client.callNeuron('your-org', 'your-neuron', {

input: 'Hello, world!'

});

console.log(response);

} catch (error) {

console.error(error);

}

```

### Complete Example

Here's a comprehensive example showing different ways to use the SDK:

```typescript

import { Prompteus } from '@prompteus-ai/neuron-runner';

async function example() {

// Create a client instance

const client = new Prompteus({

jwtOrApiKey: 'your-api-key', // Optional

baseURL: 'https://run.prompteus.com' // Optional

});

try {

// Basic call

const basicResponse = await client.callNeuron(

'my-org',

'my-neuron',

{

input: 'What is the meaning of life?'

}

);

console.log('Basic response:', basicResponse);

// Call with cache bypass and raw output

const advancedResponse = await client.callNeuron(

'my-org',

'my-neuron',

{

input: 'Fresh response please',

bypassCache: true,

rawOutput: true

}

);

console.log('Advanced response:', advancedResponse);

// Call with different authentication

const authenticatedResponse = await client.callNeuron(

'my-org',

'my-neuron',

{

input: 'Hello with different auth',

jwtOrApiKey: 'different-api-key'

}

);

console.log('Authenticated response:', authenticatedResponse);

// Call with custom headers

const customResponse = await client.callNeuron(

'my-org',

'my-neuron',

{

input: 'Hello with custom headers',

headers: {

'Custom-Header': 'value'

}

}

);

console.log('Custom response:', customResponse);

} catch (error) {

if ('statusCode' in error) {

console.error(

`API Error ${error.statusCode}: ${error.error}`

);

} else {

console.error('Unexpected error:', error);

}

}

}

```

### Advanced Options

The `callNeuron` method accepts these options:

```typescript

interface CallNeuronOptions {

bypassCache?: boolean; // Force new execution, bypass caching

input?: string; // Input text for the neuron

rawOutput?: boolean; // Return raw text output

headers?: Record; // Additional headers

jwtOrApiKey?: string; // Auth token for this request

}

```

Example with options:

```typescript

const response = await client.callNeuron('org', 'neuron', {

input: 'Hello',

bypassCache: true,

rawOutput: true,

headers: {

'Custom-Header': 'value'

}

});

```

### Type Safety

The SDK provides TypeScript types for all responses:

```typescript

interface NeuronSuccessResponse {

output?: string;

fromCache?: boolean;

executionStopped?: boolean;

}

interface NeuronErrorResponse {

error: string;

statusCode: number;

}

```

### Error Handling

The SDK provides built-in error handling with TypeScript types, and exposes the [error status code and message from the API](#error-handling). Here's how to handle different error scenarios:

```typescript

try {

const response = await client.callNeuron('org', 'neuron', {

input: 'Hello'

});

// Process successful response...

} catch (error) {

if ('statusCode' in error) {

// Handle specific error types

switch (error.statusCode) {

case 429:

console.error('Rate limited:', error.error);

// Implement retry logic

break;

case 401:

console.error('Authentication failed:', error.error);

// Refresh authentication

break;

default:

console.error(`API Error ${error.statusCode}:`, error.error);

}

} else {

console.error('Unexpected error:', error);

}

}

```

The SDK throws typed errors that include both the error message and status code, making it easy to implement specific error handling logic for different scenarios.

### Complete Example

Here's a comprehensive example showing different ways to use the SDK:

```typescript

import { Prompteus } from '@prompteus-ai/neuron-runner';

async function example() {

// Create a client instance

const client = new Prompteus({

jwtOrApiKey: 'your-api-key', // Optional

baseURL: 'https://run.prompteus.com' // Optional

});

try {

// Basic call

const basicResponse = await client.callNeuron(

'my-org',

'my-neuron',

{

input: 'What is the meaning of life?'

}

);

console.log('Basic response:', basicResponse);

// Call with cache bypass and raw output

const advancedResponse = await client.callNeuron(

'my-org',

'my-neuron',

{

input: 'Fresh response please',

bypassCache: true,

rawOutput: true

}

);

console.log('Advanced response:', advancedResponse);

// Call with different authentication

const authenticatedResponse = await client.callNeuron(

'my-org',

'my-neuron',

{

input: 'Hello with different auth',

jwtOrApiKey: 'different-api-key'

}

);

console.log('Authenticated response:', authenticatedResponse);

// Call with custom headers

const customResponse = await client.callNeuron(

'my-org',

'my-neuron',

{

input: 'Hello with custom headers',

headers: {

'Custom-Header': 'value'

}

}

);

console.log('Custom response:', customResponse);

} catch (error) {

if ('statusCode' in error) {

console.error(

`API Error ${error.statusCode}: ${error.error}`

);

} else {

console.error('Unexpected error:', error);

}

}

}

```

## Related Resources

* [Introduction to Neurons](/neurons/intro) - Learn about Neurons

* [Access Control](/neurons/settings/access-control) - Configure authentication

* [Rate Limiting](/neurons/settings/rate-limiting) - Understand usage limits

* [Caching](/neurons/settings/caching) - Learn about response caching

* [Execution Logs](/neurons/logging) - Monitor Neuron calls

# Call AI Model

Source: https://docs.prompteus.com/neurons/editor/nodes/call-ai-model

Learn how to use the **Call AI Model** node to interact with various AI providers and models, with support for structured output.

The **Call AI Model** node is a powerful component that allows you to make calls to various AI providers and their models. This guide explains how to configure and use AI model calls effectively in your Neurons.

The **Call AI Model** node enables you to interact with AI models from

different providers, configure model parameters, and optionally enforce

structured output formats using JSON schemas.

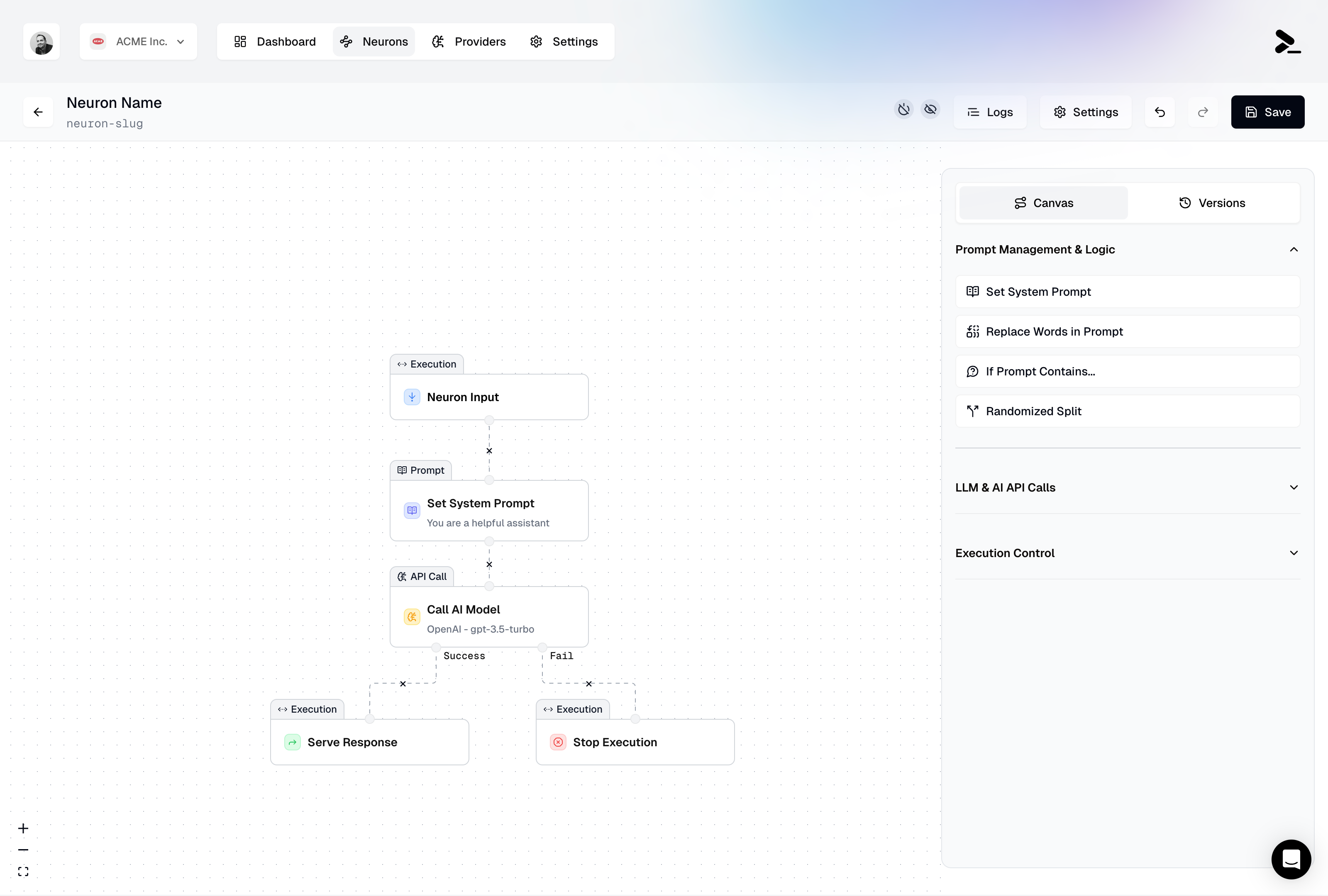

## Functionality

The **Call AI Model** node allows you to:

* Select from [configured AI providers](/features/providers)

* Choose specific models for each provider

* Configure model parameters

* Define structured output schemas (when supported)

* Chain multiple model calls for advanced workflows

## Node Properties

### AI Provider Configuration

* **AI Provider:** Select from your configured providers (OpenAI, Google, Anthropic, Cohere, Mistral, or Cloudflare Workers AI)

Providers must be configured with valid API keys in the

[Providers](/features/providers) section before they appear in the list. You can

manage multiple API keys per provider for different environments or

projects.

### MCP Server tools selection

[Using an MCP Server in a Neuron workflow](/features/mcp-servers#using-an-mcp-server-in-a-neuron-workflow)

### Model Selection

* **Model:** Choose from available models for the selected provider

* Model availability depends on the selected provider

* Cloudflare Workers AI provides access to 50+ models including Llama, Mistral, etc.

* Some models support structured output, while others don't

### Model Parameters

Available parameters and their valid ranges vary depending on the selected

model. The interface will automatically update to only show the parameters

supported by your chosen model.

Common parameters across many models include:

| Parameter | Description | Notes |

| --------------------------------- | ------------------------------------------------ | ------------------------------------------------------------------------------------------------------------------------------------------------------------------------------ |

| **Max Output Tokens** | Maximum number of tokens in the model's response | Higher values allow longer responses but increase costs.

Each model has its own maximum limit. |

| **Temperature** | Controls response randomness | Lower values (0.1-0.3): More focused, deterministic responses.

Higher values (0.7-1.0): More creative, varied responses.

Recommended: 0.1-0.3 for structured output. |

| **Top P**

(Nucleus Sampling) | Controls response diversity | Works alongside temperature.

Lower values: More focused on likely tokens.

Higher values: More diverse vocabulary.

Not available in all models. |

| **Top K** | Limits token selection to K most likely tokens | Helps prevent unlikely token selections.

Only available in specific models (e.g., Google's Gemini). |

| **Frequency Penalty** | Reduces repetition based on token frequency | Higher values discourage repeating information.

Useful for diverse content.

Primarily in OpenAI models. |

| **Presence Penalty** | Penalizes tokens that have appeared at all | Higher values encourage new topics.

Helps prevent theme repetition.

Primarily in OpenAI models. |

Some models may expose additional parameters not listed here. Always check the

provider's documentation for model-specific parameter details.

### Output Configuration

* **Output Format:**

* Text (default): Regular text output

* Structured Output: JSON-formatted output following a schema

* **Schema Configuration:** (When using Structured Output)

* Inline JSON: Define the schema directly

* URL: Reference an external JSON Schema

## Advanced Usage: Chaining Models

You can create powerful workflows by chaining different models using nodes:

1. Use a more capable model for initial processing

2. Connect to a **Use Output** node

3. Change the system instructions of the second model, to ask it to structure the output into a specific format, for example: `Extract useful information from the following text`

4. Feed the result to a fast (and cheap!) model with structured output support

This pattern allows you to:

* Leverage the strengths of different models

* Enforce structured output even with models that don't natively support it

* Optimize for both quality and cost

## Tips and Best Practices

* Start with lower temperatures (0.1-0.3) when using structured output to get more consistent results

* Use Top K and Top P carefully as they can significantly impact output quality

* When using structured output:

* Ensure your schema is valid and well-defined

* Test with simple schemas before moving to complex ones

* Monitor token usage and costs through [execution logs](/neurons/logging) when chaining multiple model calls

## Testing Undeployed Versions

You can test undeployed versions of your Neurons using the **Snippets** button in the editor. This feature:

* Generates code snippets that can be used to call your Neuron in its current state

* Allows you to test revisions before deploying

* Provides example code in various programming languages

* Helps verify that your changes work as expected

This is particularly useful when:

* Making changes to model parameters

* Testing new structured output schemas

* Verifying model chaining behavior

* Debugging issues with specific configurations

# Call Neuron

Source: https://docs.prompteus.com/neurons/editor/nodes/call-neuron

Learn how to use the **Call Neuron** node to invoke other neurons and pass context between them.

The **Call Neuron** node enables you to invoke other neurons within your workflow, passing the current input and context. This allows for modular workflow design and reuse of common functionality.

The **Call Neuron** node lets you execute another neuron as part of your workflow, maintaining context and enabling workflow composition.

## Functionality

The **Call Neuron** node:

* Executes another neuron using the current input

* Passes all context from the current workflow

* Returns the called neuron's response

* Enables workflow modularity and reuse

## Usage Examples

### Scenario: Modular Processing

```mermaid

graph LR

A[Input] --> B[Call Neuron: Format Text] --> C[Call Neuron: Analyze] --> D[Serve Output]

```

In this example, separate neurons handle text formatting and analysis, making the components reusable across different workflows.

### Scenario: Conditional Neuron Selection

```mermaid

graph TD

A[Check Language] -->|English| B[Call Neuron: English Processing]

A -->|Spanish| C[Call Neuron: Spanish Processing]

B --> D[Serve Output]

C --> D

```

## Tips and Best Practices

* Design neurons to be modular and reusable

* Use meaningful neuron names for better workflow readability

* Consider creating utility neurons for common operations

* Remember that called neurons inherit the context of the calling workflow

* Avoid deep nesting of neuron calls to maintain clarity

* Use the same input/output format conventions across related neurons

## Common Use Cases

* Language-specific processing